Replacing Kapacitor

It's possible to replace Kapacitor with Quix. The basic steps are:

- Use Quix's prebuilt connectors to connect to InfluxDB v2 or InfluxDB v3 databases, as required.

- Implement your Flux or TICKscript tasks in Python, using the Quix Streams client library.

The code you create to query and process your InfluxDB data runs in Quix and has all the flexibility of Python. Quix Streams also includes many common processing facilities, such as aggregations, windowing, and filtering, which reduces the amount of code you need to write.
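To make this concrete, here is a minimal sketch of the kind of logic that a Flux `aggregateWindow(every: 1m, fn: mean)` task, or a TICKscript `window()` plus `mean()` task, performs. It filters out invalid points and then computes a mean for each fixed, non-overlapping (tumbling) window. The sketch deliberately uses only the Python standard library and does not call the Quix Streams API. In Quix Streams you would express the same steps with its built-in filtering and windowing operators. The record layout (`time_ms`, `value`) and the helper name `tumbling_mean` are hypothetical.

```python
from collections import defaultdict


def tumbling_mean(points, window_ms):
    """Group points into fixed, non-overlapping windows and average each window.

    points: iterable of dicts with hypothetical keys 'time_ms' and 'value'.
    Returns a list of (window_start_ms, mean_value) sorted by window start.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for p in points:
        # Filtering step: skip points that have no value.
        if p.get("value") is None:
            continue
        # Align each timestamp to the start of the window it falls into.
        start = p["time_ms"] - (p["time_ms"] % window_ms)
        sums[start] += p["value"]
        counts[start] += 1
    return [(start, sums[start] / counts[start]) for start in sorted(sums)]


readings = [
    {"time_ms": 0, "value": 10.0},
    {"time_ms": 30_000, "value": 20.0},
    {"time_ms": 61_000, "value": None},  # dropped by the filter step
    {"time_ms": 75_000, "value": 40.0},
]
print(tumbling_mean(readings, window_ms=60_000))  # [(0, 15.0), (60000, 40.0)]
```

This batch version sees all points at once. A stream processor instead updates each window incrementally and emits a result when the window closes. The per-window arithmetic is the same in both cases.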

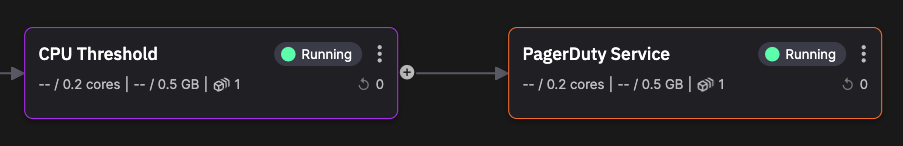

The following illustrates a typical processing pipeline running in Quix.

Kapacitor / Quix feature comparison

| Feature | Kapacitor | Quix |
|---|---|---|
| Streaming data processing | Kapacitor enables users to process streaming data in real time, so that actions can be taken as soon as data is received. | Quix was built from the ground up as a stream processing platform. |
| Data processing tasks | Kapacitor supports a variety of data processing tasks such as downsampling, aggregations, transformations, and anomaly detection. These tasks can be defined using TICKscript, a domain-specific language (DSL) designed specifically for Kapacitor. | Quix uses Python as its main programming language. Code is implemented using Quix Streams, the Python stream processing client library. Quix Streams has many built-in stream processing features such as aggregations, windowing, and filtering. Read the guide on how to replace Flux/TICKscript with Quix Streams. |
| Alerting and monitoring | Kapacitor enables users to define alerting rules based on predefined conditions or anomalies detected in the data. Alerts can be sent through various channels such as email, Slack, and PagerDuty. | Quix supports alerts through Python code that detects events and determines whether thresholds have been crossed. Connectors to alerting services are provided, and new ones can be added with minimal code. For a detailed example, read the InfluxDB alerting with Quix Streams and PagerDuty tutorial. |
| Integration with InfluxDB | Kapacitor integrates seamlessly with InfluxDB, enabling users to perform advanced analytics and processing on the time-series data stored in InfluxDB. | Quix connects to both InfluxDB v2 and InfluxDB v3 using prebuilt connectors. For v3, both source and sink connectors are available. For v2, only a source connector is currently available. If you want to migrate data from InfluxDB v2 to InfluxDB v3, read the tutorial. |
| Scalability | Kapacitor is designed to be horizontally scalable, enabling it to handle large volumes of data and scale alongside the rest of the TICK Stack components. | Quix was designed to be both vertically and horizontally scalable. It is based on Kafka, using either a Quix-hosted broker or an externally hosted broker. This means all the horizontal scaling features of Kafka, such as consumer groups, are built into Quix. Quix also enables you to configure the number of replicas, RAM, and CPU resources allocated to each service (deployment), for fine-grained vertical scaling. |
| High availability | Kapacitor supports high availability setups to ensure uninterrupted data processing and alerting, even in the case of node failures. | Because Quix uses a Kafka broker (including Kafka-compatible brokers such as Redpanda), it inherits the high availability features of a Kafka-based solution. In addition, Quix uses a Kubernetes cluster to distribute and manage the containers that execute your services' Python code. |
| Replay and backfilling | Kapacitor enables users to replay historical data or backfill missing data, so they can analyze past events or ensure data consistency. | Quix leverages Kafka's retention capabilities for data backfilling and analysis. You can process historical data stored in Kafka topics using standard Kafka consumer patterns. This is useful for testing and evaluating processing pipelines, and for examining historical data. Quix connectors extend this further by letting you connect to external tools. |
| Extensibility | Kapacitor provides an extensible architecture, enabling users to develop and integrate custom functions, connectors, and integrations to suit their specific requirements. | Quix is fully extensible using Python. Complex stream processing pipelines can be built one service at a time and then deployed with a single click. You can also use standard connectors to connect to a wide range of third-party services, and integrations extend these capabilities further. In addition, REST and real-time APIs are available for use with any language that supports REST or WebSockets. Because Quix is designed around standard Git development workflows, developers can collaborate on projects. |
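The alerting row of the table describes detecting threshold crossings in Python code. The minimal sketch below shows one way to do that. It uses plain standard-library Python, not the Quix Streams API or any connector. It raises a `CRITICAL` alert when a value rises above a threshold and an `OK` alert when the value falls back, rather than alerting on every point. This is similar in spirit to Kapacitor's `stateChangesOnly()` alert behavior. The `Alert` class, the `detect_crossings` helper, and the `time_ms`/`value` record keys are hypothetical. Sending the alert to a service such as PagerDuty would be a separate step.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class Alert:
    time_ms: int
    level: str  # "CRITICAL" when the threshold is crossed upward, "OK" on recovery
    value: float


def detect_crossings(points: Iterable[dict], threshold: float) -> List[Alert]:
    """Emit an alert only when the value crosses the threshold in either direction.

    points: iterable of dicts with hypothetical keys 'time_ms' and 'value'.
    """
    alerts: List[Alert] = []
    above: Optional[bool] = None  # state is unknown until the first point arrives
    for p in points:
        now_above = p["value"] > threshold
        if above is None:
            # First point: alert only if the stream starts in the critical state.
            if now_above:
                alerts.append(Alert(p["time_ms"], "CRITICAL", p["value"]))
        elif now_above != above:
            # State changed since the previous point: emit CRITICAL or OK.
            level = "CRITICAL" if now_above else "OK"
            alerts.append(Alert(p["time_ms"], level, p["value"]))
        above = now_above
    return alerts


temps = [
    {"time_ms": 0, "value": 70.0},
    {"time_ms": 1_000, "value": 95.0},  # crosses above 90: CRITICAL
    {"time_ms": 2_000, "value": 97.0},  # still above: no new alert
    {"time_ms": 3_000, "value": 80.0},  # falls back below 90: OK
]
for alert in detect_crossings(temps, threshold=90.0):
    print(alert)
```

The function keeps only the previous state (`above`), so memory use stays constant however long the stream runs. A long-running service would also need that state to survive restarts. Otherwise, a restart could cause a duplicate or missed alert.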

See also

Read the general overview of Quix.

If you are new to Quix, you could try our Quickstart and then complete the Quix Cloud Tour. This gives you a good overview of how to use Quix for a minimal investment of your time.

You can read more about stream processing.

You can read more about Kafka in our What is Kafka? documentation.

You can read the guide on Replacing Flux with Quix Streams, which includes some common use case examples.

The predictive maintenance tutorial provides a large-scale example of what you can do with Quix, Quix Streams, and InfluxDB.
