Quix Streams—a reliable Faust alternative for Python stream processing

A detailed comparison between Faust and Quix Streams covering criteria like performance, coding experience, features, integrations, and product maturity.

Nowadays, Python is the most popular programming language in the world for data science, analytics, data engineering, and machine learning. These use cases often require collecting and processing streaming data in real time.

Stream processing technologies have traditionally been Java-based. However, since many of Python’s real-world applications are underpinned by streaming data, it’s no surprise that an ecosystem of Python stream processing tools is emerging.

Faust, PySpark, and PyFlink are arguably the best-known Python stream processors available today, as they have been around the longest. Of these three, though, Faust is the only pure Python stream processing engine, and it runs as a client-side library. PySpark and PyFlink, by contrast, are wrappers around server-side Java engines (Apache Spark and Apache Flink). This can lead to additional complexity: check out our PyFlink deep dive to learn more about the challenges of building and deploying PyFlink pipelines.

In any case, Faust, PySpark, and PyFlink are not the only options available for Python stream processing. Newer, next-generation alternatives have started to appear over the last couple of years, with Quix Streams being a prime example.

This article explores why Quix Streams is a viable alternative to Faust for Python stream processing. We’ll compare them based on the following criteria:

- Coding experience

- Stream processing features

- Performance and reliability

- Ease of use

- Integrations and compatibility

- Maturity and support

Faust overview

Originally developed at Robinhood, Faust is an open-source Python stream processing library that works in conjunction with Apache Kafka.

Faust is used to deliver distributed systems, real-time analytics, real-time data pipelines, real-time ML pipelines, event-driven architectures, and applications requiring high-throughput, low-latency message processing (e.g., fraud detection apps and user activity tracking systems).

Key Faust features and capabilities

- Supports both stream processing and event processing

- No DSL

- Works with other Python libraries, such as NumPy, PyTorch, Pandas, Django, and Flask

- Uses Python async/await syntax

- High performance (a single worker instance is supposedly capable of processing tens of thousands of events every second)

- Supports stateful operations (such as windowed aggregations) via tables

- Highly available and fault tolerant by design, with support for exactly-once semantics (at least in theory)

Faust certainly offers an appealing set of features and capabilities. But some users have reported drawbacks, too. For example, Kapernikov (a software development agency) assessed several stream processing solutions for one of their projects, including Faust, and ultimately disqualified it. You can read this article written by Frank Dekervel and published on the Kapernikov blog to find out the reasoning behind this decision. In a nutshell, the Kapernikov team concluded that Faust can be unreliable, exactly-once processing doesn’t always work as expected, the code can be verbose, and testing can be problematic.

Another example: in a tutorial published on Towards Data Science, the author, Ali Osia (CTO and Co-Founder at InnoBrain), mentions that “Faust’s documentation can be confusing, and the source code can be difficult to comprehend”.

It’s also concerning that Robinhood has abandoned Faust (it’s unclear why exactly). There is now a fork of Robinhood’s original Faust repo which is maintained by the open source community. However, it has many open issues, and without any commercial backing or significant ongoing community involvement, it’s uncertain whether Faust will continue to mature (and to what extent).

Quix Streams overview

Quix Streams is an open-source, cloud-native library for data streaming and stream processing using Kafka and pure Python. It’s designed to give you the power of a distributed system in a lightweight library by combining Kafka's low-level scalability and resiliency features with an easy-to-use Python interface.

Quix Streams enables you to implement high-performance stream processing pipelines with numerous real-world applications, including real-time machine learning, predictive maintenance, clickstream analytics, fraud detection, and sentiment analysis. Check out the gallery of Quix templates and these tutorials to see more concrete examples of what you can build with Quix Streams.

Key Quix Streams features and capabilities

- Pure Python (no JVM, no wrappers, no DSL, no cross-language debugging)

- No orchestrator, no server-side engine

- Compatible with various Kafka brokers: Apache Kafka (version 0.10 or later), Quix Cloud-managed brokers, Confluent Cloud, Redpanda, Aiven, and Upstash

- Streaming DataFrame API (similar to pandas DataFrame) for tabular data transformations

- Easily integrates with the entire Python ecosystem (pandas, scikit-learn, TensorFlow, PyTorch, etc.)

- Support for many serialization formats, including JSON and Quix-specific formats

- Support for stateful operations (using RocksDB)

- Support for aggregations over tumbling and hopping time windows

- At-least-once Kafka processing guarantees

- Designed to run and scale resiliently via container orchestration (Kubernetes)

- Easily runs locally and in Jupyter Notebook for convenient development and debugging

- Seamless integration with the fully managed Quix Cloud platform
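
To make the difference between the tumbling and hopping windows mentioned in the list concrete, here is a minimal, library-independent sketch in plain Python. It is not the Quix Streams implementation: the function names `tumbling_window` and `hopping_windows` and the millisecond timestamps are assumptions made purely for illustration. A tumbling window assigns each event to exactly one fixed-size bucket, while hopping windows overlap, so a single event can belong to several of them.

```python
from typing import List, Tuple


def tumbling_window(ts_ms: int, size_ms: int) -> Tuple[int, int]:
    """Return the single [start, end) window that contains ts_ms."""
    start = ts_ms - (ts_ms % size_ms)
    return (start, start + size_ms)


def hopping_windows(ts_ms: int, size_ms: int, step_ms: int) -> List[Tuple[int, int]]:
    """Return every [start, end) window of length size_ms, advancing by step_ms,
    that contains ts_ms."""
    windows = []
    # Latest step-aligned window start that is not after the event.
    start = ts_ms - (ts_ms % step_ms)
    # Walk backwards while the window still covers the event.
    while start > ts_ms - size_ms and start >= 0:
        windows.append((start, start + size_ms))
        start -= step_ms
    return sorted(windows)


# An event at 12.5 s, with a 10 s window and (for hopping) a 5 s step.
print(tumbling_window(12_500, 10_000))
print(hopping_windows(12_500, 10_000, 5_000))
```

With these numbers, the event lands in one tumbling window but in two overlapping hopping windows, which is why hopping aggregations emit more results for the same input.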

Faust vs Quix Streams: A head-to-head comparison

Now that we’ve introduced Faust and Quix Streams, it’s time to see how these two Python stream processors compare based on criteria like performance, ease of use, coding experience, feature set, and integrations. These are all essential factors to consider before choosing the stream processing technology that’s best suited for your needs.

Coding experience

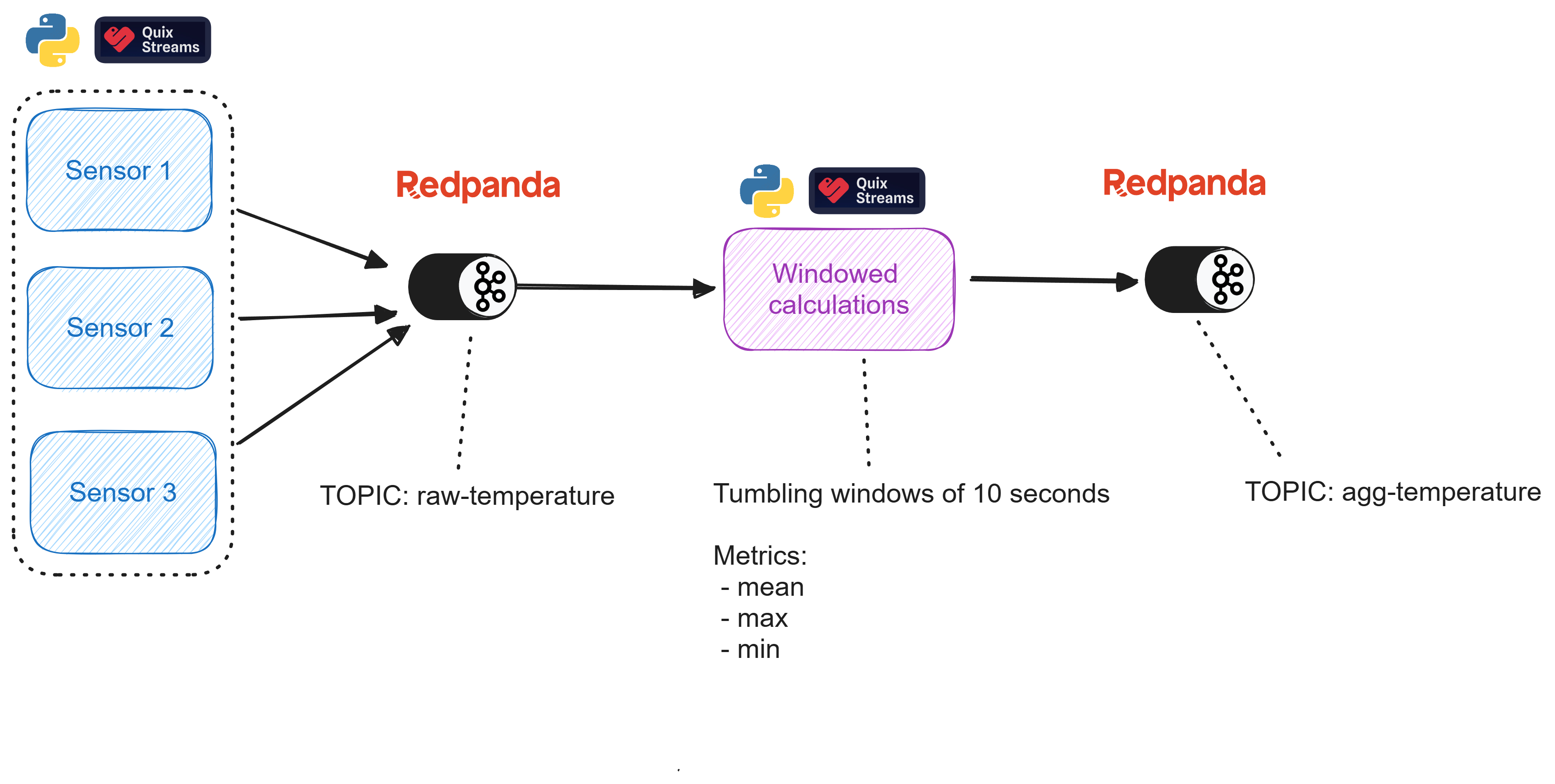

I’ll start by comparing the coding experience when using Quix Streams and Faust. Specifically, I’ll show you how to use each of them to calculate aggregations (count, min, max, and mean) over 10-second tumbling windows of temperature readings coming from various sensors. While Quix Streams or Faust acts as the stream processor in our example, Redpanda is the (Kafka-compatible) streaming data platform used to:

- Collect raw sensor readings and serve them to Quix Streams/Faust for processing.

- Store the results of temperature aggregations once they have been calculated by the stream processing component.

Quix Streams code example

Here’s how to install Quix Streams:

pip install quixstreams

And here’s the stream processing logic:

# ADD DEPENDENCIES
import json
import logging
from datetime import timedelta

from quixstreams import Application

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# DEFINE VARIABLES
TOPIC = "raw-temperature"  # Redpanda input topic
SINK = "agg-temperature"  # Redpanda output topic
WINDOW = 10  # defines the length of the time window in seconds
WINDOW_EXPIRES = 1  # defines, in seconds, how late data can arrive before it is excluded from the window

# DEFINE QUIX APPLICATION
app = Application(
    broker_address="localhost:19092",
    consumer_group="quix-stream-processor",
    auto_offset_reset="earliest",
)

# DEFINE REDPANDA INPUT AND OUTPUT TOPICS AND CREATE FUNCTION TO TRANSFORM INCOMING DATA INTO A DATAFRAME
input_topic = app.topic(TOPIC, value_deserializer="json")
output_topic = app.topic(SINK, value_serializer="json")

sdf = app.dataframe(input_topic)
sdf = sdf.update(lambda value: logger.info(f"Input value received: {value}"))

# INITIALIZE AGGREGATION
# Note: json.loads() assumes each message value arrives as a JSON-encoded string.
# If your deserializer already yields a dict, use the value directly instead.
def initializer(value: str) -> dict:
    value_dict = json.loads(value)
    return {
        "count": 1,
        "min": value_dict["value"],
        "max": value_dict["value"],
        "mean": value_dict["value"],
    }

# AGGREGATION LOGIC
def reducer(aggregated: dict, value: str) -> dict:
    aggcount = aggregated["count"] + 1
    value_dict = json.loads(value)
    return {
        "count": aggcount,
        "min": min(aggregated["min"], value_dict["value"]),
        "max": max(aggregated["max"], value_dict["value"]),
        # Incremental mean: rescale the previous mean by the old count, add the new value
        "mean": (aggregated["mean"] * aggregated["count"] + value_dict["value"]) / aggcount,
    }

# DEFINING TUMBLING WINDOW FUNCTIONALITY
### Define the window parameters such as type and length
sdf = (
    # Define a tumbling window of 10 seconds
    sdf.tumbling_window(timedelta(seconds=WINDOW), grace_ms=timedelta(seconds=WINDOW_EXPIRES))
    # Create a "reduce" aggregation with "reducer" and "initializer" functions
    .reduce(reducer=reducer, initializer=initializer)
    # Emit results only for closed 10 second windows
    .final()
)

### Apply the window to the Streaming DataFrame and define the data points to include in the output
sdf = sdf.apply(
    lambda value: {
        "time": value["end"],  # Use the window end time as the timestamp for message sent to the 'agg-temperature' topic
        "temperature": value["value"],  # Send a dictionary of {count, min, max, mean} values for the temperature parameter
    }
)

# PRODUCE THE RESULTS TO REDPANDA OUTPUT TOPIC
sdf = sdf.to_topic(output_topic)
sdf = sdf.update(lambda value: logger.info(f"Produced value: {value}"))

if __name__ == "__main__":
    logger.info("Starting application")
    app.run(sdf)

Quix Streams offers a high-level abstraction over Kafka with pandas DataFrame-like operations (read this article to learn more about the Quix Streaming DataFrame API). This declarative syntax enhances readability and reduces code length, providing a streamlined experience to Python developers.

Note that this was a short, simplified demonstration focusing strictly on Quix Streams as a stream processor. For the complete, extended version of this use case, please refer to the “Aggregating Real-time Sensor Data with Python and Redpanda” blog post published by my colleague and Quix CTO, Tomáš Neubauer in Towards Data Science. That blog post contains additional details and helpful context, including how to set up Redpanda and run the stream processing pipeline.
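
If the `initializer` and `reducer` pair is unfamiliar, the following plain-Python sketch shows the idea behind a "reduce" aggregation without needing a broker. It assumes readings are already-decoded dictionaries with a `value` key, and `aggregate_window` is a hypothetical helper that stands in for what a stream processor does for each window; it is not part of the Quix Streams API.

```python
from functools import reduce


def initializer(reading: dict) -> dict:
    """Build the initial aggregate from the first reading in a window."""
    v = reading["value"]
    return {"count": 1, "min": v, "max": v, "mean": v}


def reducer(agg: dict, reading: dict) -> dict:
    """Fold one more reading into the running aggregate."""
    v = reading["value"]
    count = agg["count"] + 1
    return {
        "count": count,
        "min": min(agg["min"], v),
        "max": max(agg["max"], v),
        # Incremental mean: no need to keep the individual readings around.
        "mean": (agg["mean"] * agg["count"] + v) / count,
    }


def aggregate_window(readings: list) -> dict:
    """Hypothetical helper: aggregate all readings that fell into one window."""
    first, *rest = readings
    return reduce(reducer, rest, initializer(first))


readings = [{"value": 20}, {"value": 24}, {"value": 22}]
result = aggregate_window(readings)
print(result)
```

The point of this design is that only the small aggregate dictionary has to be kept in state per window, rather than every event that arrived.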

Faust code example

Once you’ve installed Faust, here’s how to define the stream processing logic:

# ADD DEPENDENCIES
from datetime import datetime, timedelta

import faust

# DEFINE VARIABLES
TOPIC = "raw-temperature"
SINK = "agg-temperature"
TABLE = "tumbling-temperature"
CLEANUP_INTERVAL = 1.0
WINDOW = 10  # 10 seconds window
WINDOW_EXPIRES = 1
PARTITIONS = 1

# DEFINE FAUST APPLICATION
app = faust.App(
    "temperature-stream",
    broker="kafka://localhost:59894",
)

# DEFINE SCHEMA OF INPUT AND OUTPUT RECORDS
### Input records
class Temperature(faust.Record, isodates=True, serializer="json"):
    ts: datetime = None
    value: int = None

### Output records
class AggTemperature(faust.Record, isodates=True, serializer="json"):
    ts: datetime = None
    count: int = None
    mean: float = None
    min: int = None
    max: int = None

# DEFINE REDPANDA INPUT (SOURCE) AND OUTPUT (SINK) TOPICS
source = app.topic(TOPIC, value_type=Temperature)
sink = app.topic(SINK, value_type=AggTemperature)

# WINDOW AGGREGATION, CALLED WHEN A WINDOW CLOSES
# (defined before the table so that on_window_close can reference it)
def window_processor(key, events):
    timestamp = key[1][0]  # key[1] is the tuple (ts, ts + window)
    values = [event.value for event in events]
    count = len(values)
    mean = sum(values) / count
    min_value = min(values)
    max_value = max(values)

    aggregated_event = AggTemperature(
        ts=timestamp, count=count, mean=mean, min=min_value, max=max_value
    )

    print(
        f"Processing window: {len(values)} events, Aggregated results: {aggregated_event}"
    )

    sink.send_soon(value=aggregated_event)  # sends the aggregation result to the destination topic

# ADD WINDOWING FUNCTIONALITY
tumbling_table = (
    app.Table(
        TABLE,
        default=list,
        key_type=str,
        value_type=Temperature,
        partitions=PARTITIONS,
        on_window_close=window_processor,
    )
    .tumbling(WINDOW, expires=timedelta(seconds=WINDOW_EXPIRES))
    .relative_to_field(Temperature.ts)
)

# DEFINE PROCESSING FUNCTION THAT WILL BE TRIGGERED WHEN A MESSAGE IS RECEIVED ON THE INPUT (SOURCE) TOPIC
@app.agent(app.topic(TOPIC, key_type=str, value_type=Temperature))
async def calculate_tumbling_temperatures(temperatures):
    async for temperature in temperatures:
        # Append the new event to the list stored for the current window
        value_list = tumbling_table["events"].value()
        value_list.append(temperature)
        tumbling_table["events"] = value_list

Here are a few key takeaways:

- Faust provides high-level abstractions like tables and agents that simplify the development of distributed stream processing applications using Kafka and Python.

- Faust supports asynchronous operations (it uses Python’s native async/await syntax).

- Faust aims to port many of the concepts and capabilities offered by Kafka Streams (a Java-based technology) to Python.

Note that the snippet above is a simplified demonstration, focusing exclusively on Faust. For a complete, end-to-end example that discusses the Faust code in detail and offers instructions on how to set up Redpanda, see the “Stream processing with Redpanda and Faust” blog post.
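
The Faust listing takes a different route from an incremental reducer: it appends every event to a per-window list and computes the aggregate only when the window closes. Here is a rough, broker-free sketch of that pattern, assuming events are (timestamp in seconds, value) pairs. `bucket_by_window` and `close_window` are hypothetical helpers written for this illustration, not Faust APIs.

```python
from collections import defaultdict
from statistics import mean

WINDOW_SECONDS = 10  # assumed window length, mirroring the listing above


def bucket_by_window(events):
    """Group (timestamp_seconds, value) pairs by the start of their tumbling window."""
    buckets = defaultdict(list)
    for ts, value in events:
        window_start = ts - (ts % WINDOW_SECONDS)
        buckets[window_start].append(value)
    return buckets


def close_window(window_start, values):
    """Compute the aggregate for one window from its full list of values."""
    return {
        "ts": window_start,
        "count": len(values),
        "mean": mean(values),
        "min": min(values),
        "max": max(values),
    }


events = [(1, 20), (4, 24), (9, 22), (12, 30), (18, 26)]
results = [
    close_window(start, values)
    for start, values in sorted(bucket_by_window(events).items())
]
for row in results:
    print(row)
```

The trade-off is that the whole list is held until the window closes, so memory use grows with the number of events per window.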

Stream processing features

Here’s how Quix Streams and Faust compare when it comes to core stream processing functionality:

Both Quix Streams and Faust offer diverse capabilities for processing and transforming streaming data. Quix Streams is actively expanding its capabilities (stream joins, aggregations over sliding windows, and exactly-once processing guarantees are some of the planned improvements). Meanwhile, it’s unclear if and when new stream processing capabilities will be added to Faust: I couldn’t find any public roadmap to refer to.

Essentially, Quix Streams offers rich capabilities and is evolving with promising enhancements on the horizon, while Faust offers an established toolkit with some practical limitations (around exactly-once processing, for example).
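
Since at-least-once delivery means a message can be processed more than once after a restart or rebalance, one common way to cope is to make the handler idempotent. The sketch below is a generic illustration rather than a feature of either library: the `IdempotentHandler` class is hypothetical, and it assumes each message carries its Kafka partition and offset.

```python
class IdempotentHandler:
    """Skips messages whose (partition, offset) has already been processed."""

    def __init__(self):
        self._last_offset = {}  # partition -> highest offset processed so far
        self.total = 0          # example state: running sum of values

    def handle(self, partition: int, offset: int, value: int) -> bool:
        """Apply the message once; return False if it was a duplicate delivery."""
        if offset <= self._last_offset.get(partition, -1):
            return False  # already applied, ignore the redelivery
        self.total += value
        self._last_offset[partition] = offset
        return True


handler = IdempotentHandler()
# (partition, offset, value); offset 1 is delivered twice, as can happen after a restart
deliveries = [(0, 0, 5), (0, 1, 7), (0, 1, 7), (0, 2, 3)]
applied = [handler.handle(*delivery) for delivery in deliveries]
print(applied, handler.total)
```

In a real pipeline the offset bookkeeping would need to be persisted together with the state it protects; this in-memory version only shows the idea.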

Performance and reliability

Regarding Faust: unfortunately, there’s very little information available about what kind of latency, throughput, and scale you can expect from Faust. I couldn’t find any blog posts or benchmarks covering this topic.

The Faust documentation states that a single-core Faust worker instance can already process tens of thousands of events every second. It is designed to be highly available and can survive network problems and server crashes. In the case of node failure, it can automatically recover.

However, there are a few unresolved issues around performance that might put some people off. For example:

- Issue #175 - a Faust application should be able to work indefinitely, but consumers stop running one by one until they've all stopped responding and the entire application hangs.

- Issue #214 - Faust sometimes crashes during rebalancing.

- Issue #306 - after being idle, producers are slow to send new messages, and consumers are slow to read them.

- Issue #247 - memory keeps increasing (and is never freed) even when Faust agents (functions that process data) aren’t doing any processing.

Quix Streams, on the other hand, is a much newer library, so it naturally has fewer open issues: the more users a library has, the greater the probability that someone will find a bug. Nevertheless, it’s worth noting that Quix Streams is built and maintained by Formula 1 engineers with extensive knowledge of data streaming and stream processing at scale. Under the hood, the library leverages Kafka and Kubernetes to provide data partitioning, consumer groups, state management, replication, and scalability. This enables Quix Streams deployments to reliably handle up to millions of messages, or multiple GBs of data, per second with consistently low latencies (in the millisecond range). We haven’t seen any performance issues so far.

Quix Streams performs best when it runs in containers on Quix Cloud, our fully managed platform for running serverless stream processing applications.

In any case, I encourage you to test both Quix Streams and Faust yourself, to see how well they perform at scale under the workload of your specific use case.
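
If you want a starting point for that kind of test, a small timing harness like the one below can give a first impression of how fast your own processing function runs. It is only a sketch: `measure_throughput` is a hypothetical helper, it times pure Python logic on in-memory messages, and it says nothing about broker, network, or serialization overhead.

```python
import time


def measure_throughput(process, messages):
    """Run `process` over all messages and report how many were handled per second."""
    start = time.perf_counter()
    processed = 0
    for message in messages:
        process(message)
        processed += 1
    elapsed = time.perf_counter() - start
    per_second = processed / elapsed if elapsed > 0 else float("inf")
    return {"processed": processed, "seconds": elapsed, "per_second": per_second}


# Example workload: convert Celsius readings to Fahrenheit.
messages = [{"value": i % 40} for i in range(100_000)]
stats = measure_throughput(lambda m: m["value"] * 1.8 + 32, messages)
print(f"{stats['processed']} messages, {stats['per_second']:,.0f} per second")
```

For a meaningful comparison, you would run the same workload end to end through each library against the same broker, since that is where most of the latency usually comes from.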

Ease of use

Choosing an easy-to-use stream processing solution lessens the complexity of dealing with streaming data and streamlines the development and maintenance of stream processing pipelines. Here’s how Faust and Quix Streams compare in terms of ease of use:

Faust and Quix Streams offer a purely Pythonic experience, with no DSL involved. This is great for any Python developer or data professional looking to leverage either of these tools. Beyond this similarity, Quix Streams is designed to match Faust in terms of user-friendliness and ease of adoption. Quix Streams also comes with extensive, regularly updated documentation, a rich selection of learning materials including tutorials and templates, and fully managed deployment options. It also enables you to develop and deploy stream processing applications faster, while keeping infrastructure management complexity to a bare minimum.

Integration and compatibility

Performance, ease of use, coding experience, and feature set are critical aspects to consider before choosing a stream processing solution. But so are built-in integrations and compatibility. Here’s how Quix Streams and Faust fare in this regard:

And here are some comments with additional details and context:

- Faust theoretically works with any Kafka broker (version 0.10 or later), be it managed by a vendor or self-hosted. In practice, though, you’d have to test to see how well Faust works with specific managed Kafka solutions.

- Quix Streams works with self-hosted Kafka brokers and has been extensively tested to ensure seamless compatibility with various managed Kafka solutions.

- Both Quix Streams and Faust are pure Python technologies without a DSL, giving you access to the wider Python ecosystem and making it easy to integrate libraries like Pandas, PyTorch, and scikit-learn into your workflows.

- Quix provides sink and source connectors for systems like InfluxDB, Redis, Postgres, Segment, and MQTT platforms, simplifying your integration process. This is not the case with Faust, which doesn’t offer ready-made connectors or a formal connector library for integrating with various external systems or databases.

Product maturity and support

How mature and well-maintained is the product? Is there a community of contributors and users who can help me if needed? These are questions you need to ponder when selecting any new software solution.

Faust faces a few challenges. Both of its GitHub repositories (the initial, now retired Robinhood project, and the newer, community-maintained fork) come with a number of open bugs and issues. As discussed, this is often indicative of a project’s popularity and wide adoption: the more a technology is used, the likelier it is to be exposed to a wide range of operating conditions, user interactions, and integration scenarios, each of which can reveal new problems. Unearthing bugs is not a bad thing (quite the contrary). But as with any community-maintained open-source library, it’s unclear how long these issues will take to fix. As many of you know, maintaining an open source project on your own time is hard.

Like many projects in the stream processing space, Quix Streams has the luxury of being backed by a commercial entity that has a vested interest in maintaining it (much like what Confluent is to Apache Kafka or Databricks is to Spark). A team of developers is dedicated to fixing bugs, releasing new features, and enhancing the library on a regular cadence. There’s also a roadmap of planned improvements, the Quix Community is growing, and users get very fast responses to their technical questions (there’s even an AI assistant to help with questions about using Quix Streams).

A brief conclusion

Faust has the merit of being one of the earliest solutions that made it possible for developers and data professionals to implement stream processing logic using pure Python. However, it has gotten a bit rough around the edges. Its repo has more than 100 open issues, affecting things like performance, scalability, and processing semantics, and the documentation is only updated sporadically. It’s unclear what the future looks like for Faust, especially since the community involved in maintaining and improving the project isn’t quite as active as it used to be.

If you’re looking for reliable, future-proof Faust alternatives for Python stream processing, I encourage you to check out Quix Streams. The team behind Quix Streams has dedicated resources to its maintenance, its documentation, and the expansion of its feature set. And you have the option of deploying your Python applications to a tailor-made runtime environment that integrates tightly with the Quix Streams Python library.

To learn more, check out the following resources:

- Quix Streams GitHub repo

- Quix Streams documentation

- Quix templates (pre-built projects and ready-to-run code to kickstart your app development process)

Check out the repo

Our Python client library is open source, and brings DataFrames and the Python ecosystem to stream processing.

Interested in Quix Cloud?

Take a look around and explore the features of our platform.
