AI Bots as difficult customers—generating synthetic customer conversations using Llama-2, Kafka and LangChain

Learn the basics for running your own AI-powered support bots and understand the challenges involved in using AI for customer support.

Generating synthetic conversational data

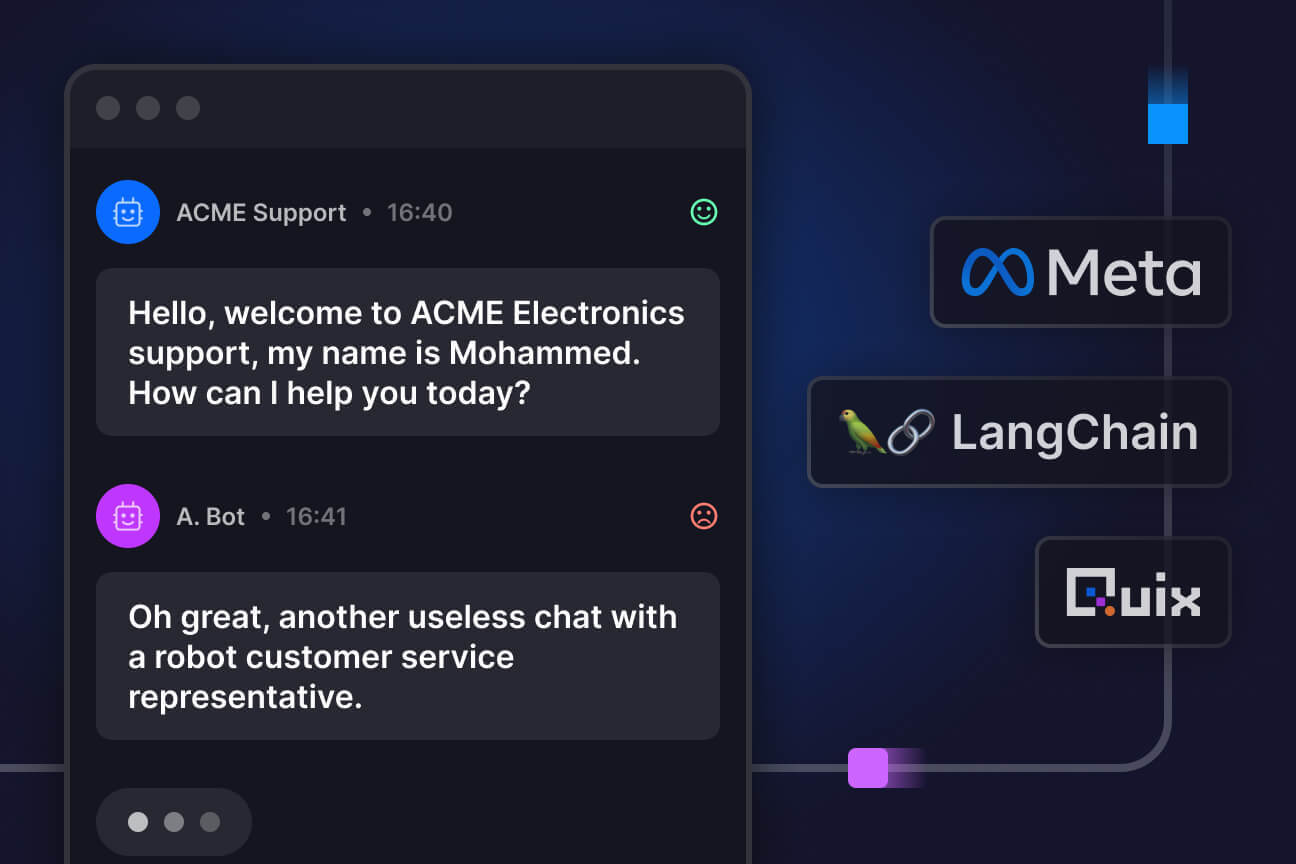

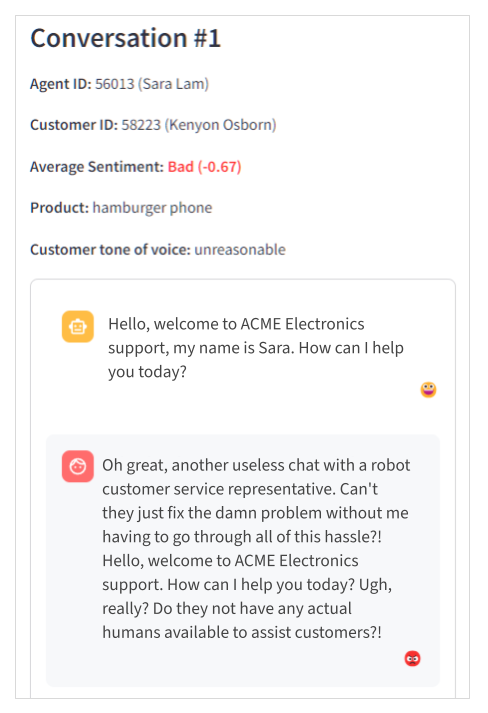

In this project, we've pitted a team of disgruntled customers against a team of customer support agents who are trying their best to help. The catch is that none of them are human: they're all bots powered by AI (in this case, Llama-2).

Why make bots talk to one another?

It's a low-risk way to test conversational AI. Artificial intelligence has tremendous potential to augment customer support teams, but AI support bots are not quite yet the panacea that we’ve all been hoping for. They still need a lot of tuning and testing before they can become useful. Understanding this process will help you to temper your expectations, set realistic goals, and test your bots.

It's also important to understand your architectural requirements. Our aim is to show you how you can use Apache Kafka and serverless Docker containers to run many resource-intensive processes (in this case AI-powered conversations) in parallel. Request and response architectures can easily get clogged if an ML model runs into memory issues, so the architecture we've used here enables you to keep these services decoupled and horizontally scalable.

Introducing the bots

The conversations on the dashboard are generated by two large language models running in separate services. One acts as the customer and the other acts as a support agent trying to assist the customer.

I’ll go into how the bots are prompted in a bit, but first, let's take a look at how these conversations fit into the sentiment analysis dashboard.

The sentiment analysis dashboard

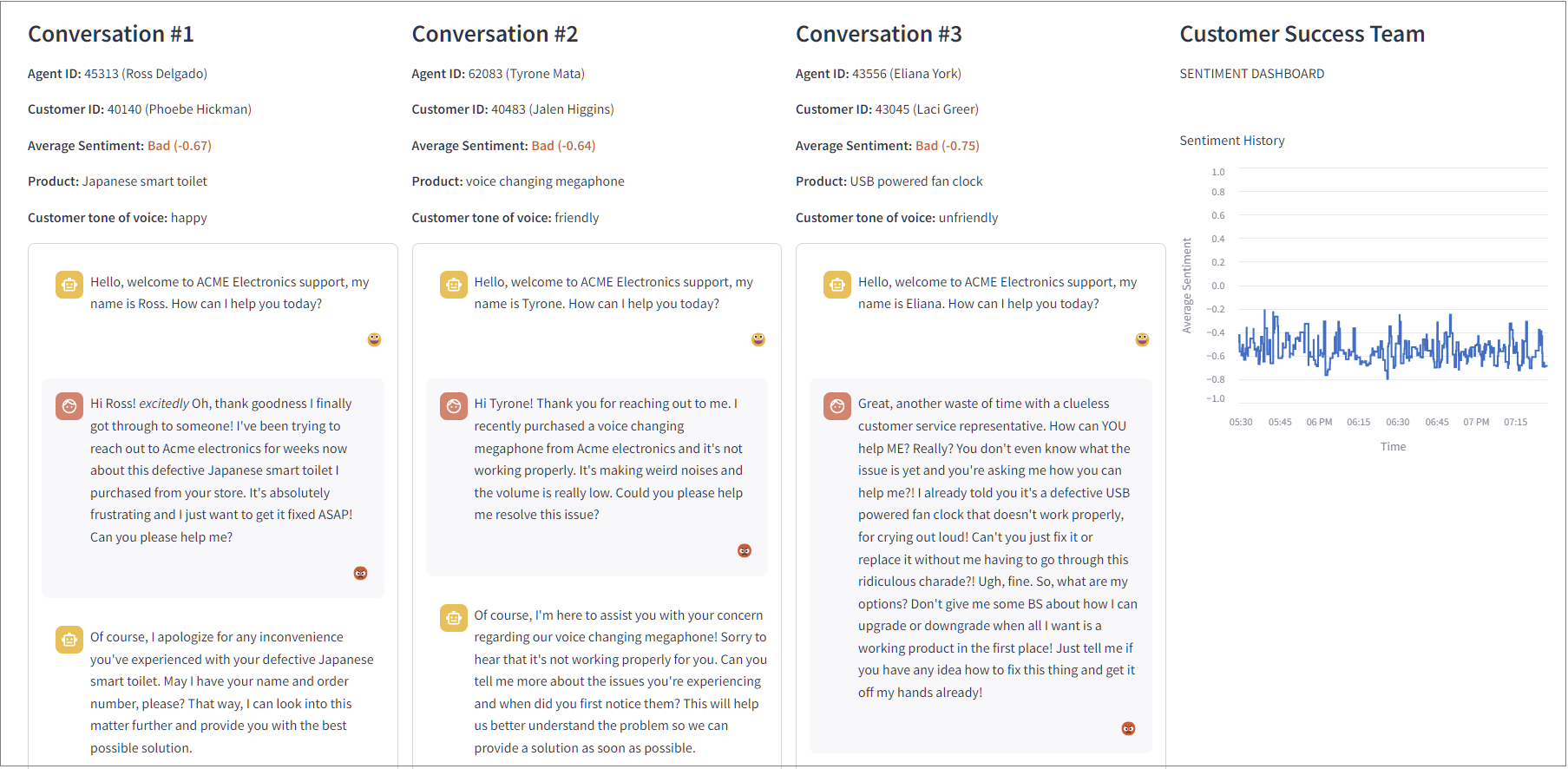

Here’s a preview of the dashboard (built in Streamlit):

Analyzing the sentiment of chat messages is a common use case, and we've already released an interactive project template that demonstrates sentiment analysis on your own chat messages.

However, it's helpful to know the average customer sentiment rather than just inspecting individual conversations. That’s why we decided to create a dashboard that summarizes the overall sentiment of running conversations as well as individual messages. We then also track the sentiment scores over time in a graph.

You can open the live demo version of this dashboard here:

https://dashboard-demo-llmcustomersupport-prod.deployments.quix.io/

Now let’s take a look at what's going on behind the scenes.

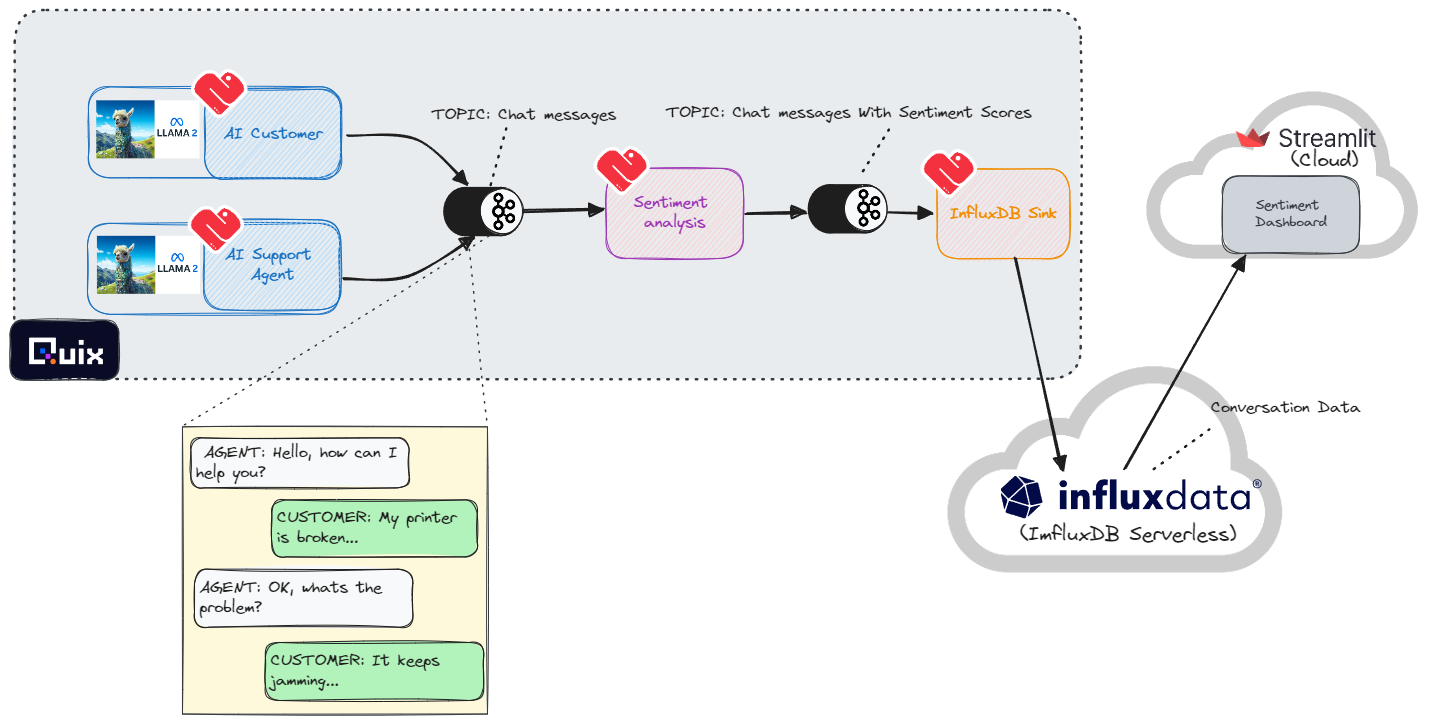

The back end architecture

The back end architecture is fairly simple. We have two “bot” services running the customer and support agents respectively. Each service is configured to use multiple replicas so that there are always several conversations going on simultaneously.

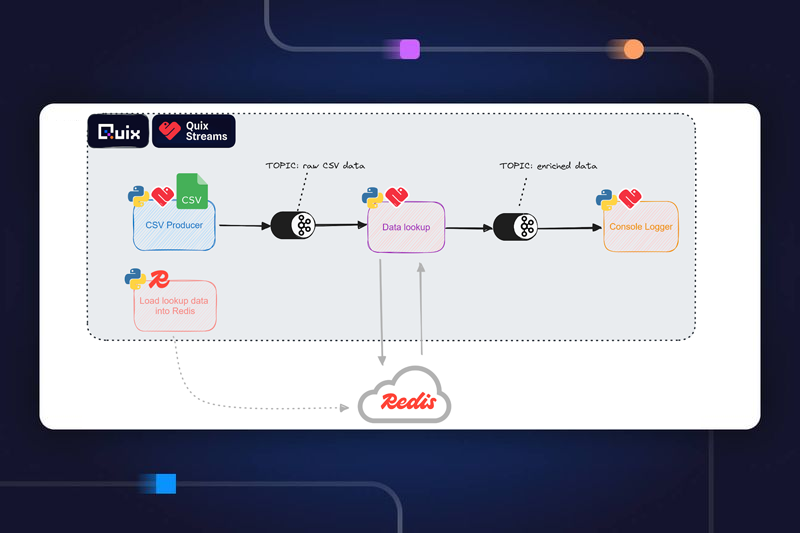

The communication between the two bots is facilitated by Apache Kafka. Each bot writes its messages to the “Chat messages” topic and reads from the same topic. Each bot filters for messages where the role is the opposite of its own (e.g., the agent ignores its own messages and only reads messages marked as being from a customer).
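
The role-based filtering described above can be sketched in a few lines of plain Python. The message shape (a dict with `role` and `text` keys) and the role labels are assumptions made for illustration, not the project's actual schema.

```python
# Hypothetical message shape: {"role": "customer" | "agent", "text": "..."}
OPPOSITE_ROLE = {"agent": "customer", "customer": "agent"}


def should_reply(message: dict, own_role: str) -> bool:
    """Return True only for messages written by the opposite role."""
    return message.get("role") == OPPOSITE_ROLE[own_role]


messages = [
    {"role": "customer", "text": "My new headphones stopped working."},
    {"role": "agent", "text": "I'm sorry to hear that. When did you buy them?"},
]

# The agent bot skips its own message and keeps only the customer's.
agent_inbox = [m for m in messages if should_reply(m, own_role="agent")]
```

Because both bots share one topic, a filter like this is what stops a bot from replying to itself.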

All of the Kafka interactions are handled by Quix Streams, our open source Python library for processing data from Kafka topics. In each service, the main module imports Quix Streams and uses it to consume, produce and transform data.

A sentiment analysis service also reads from the “Chat messages” topic and scores each message for sentiment (positive, negative, or neutral). It then writes the enriched chat data to a new topic, annotated with sentiment scores.

Finally, the InfluxDB Sink takes the data, aggregates the sentiment scores, and continuously writes the output to an InfluxDB database. This is primarily so that the dashboard (running in Streamlit Cloud or in Quix) can easily access the data. Note that it is possible for Streamlit to read directly from Kafka using Quix Streams; however, this can be unstable when the data transmission rate is extremely high.
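
To make the aggregation step more concrete, here is one plausible way to average sentiment per conversation and overall. The field names (`conversation_id`, `sentiment`) and the numeric score range are assumptions; the sink's real aggregation logic may differ.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical enriched messages; field names and scores are illustrative only.
scored_messages = [
    {"conversation_id": "c1", "sentiment": -0.6},
    {"conversation_id": "c1", "sentiment": -0.2},
    {"conversation_id": "c2", "sentiment": 0.5},
]


def average_sentiment(messages):
    """Group sentiment scores by conversation and average each group."""
    scores = defaultdict(list)
    for message in messages:
        scores[message["conversation_id"]].append(message["sentiment"])
    return {conv_id: mean(values) for conv_id, values in scores.items()}


per_conversation = average_sentiment(scored_messages)
overall = mean(m["sentiment"] for m in scored_messages)
```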

Using Llama2 as the language model

We are using a language model that is part of the Llama2 family, which was released in July 2023. Specifically, we decided to use the ‘llama-2-7b-chat’ model, which has been quantized by Tom Jobbins (AKA “TheBloke”) for better performance on non-GPU devices.

We’re using it in combination with the llama-cpp-python library which enables us to use our system resources as efficiently as possible and run large language models on lower-power devices.

Llama2 models are also available in larger sizes (with more parameters), such as ‘llama-2-13b’ and ‘llama-2-70b’. However, we opted to use the smallest model so that you can test this project with a trial Quix account or on your local machine.
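
A rough back-of-the-envelope calculation shows why the smallest, quantized model is the practical choice for a laptop or trial account. The sketch below assumes nominal parameter counts and exactly 4 bits per weight, and it counts the weights only; real quantized files are somewhat larger and the context window needs extra memory on top.

```python
def weight_memory_gb(parameters: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in gigabytes (10^9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


for name, parameters in [("7b", 7e9), ("13b", 13e9), ("70b", 70e9)]:
    fp16 = weight_memory_gb(parameters, 16)
    q4 = weight_memory_gb(parameters, 4)
    print(f"llama-2-{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```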

Using LangChain to manage language model interactions

We used the LangChain Python library to manage our prompts and to ensure that the model does not run out of memory. LangChain is designed for building applications that combine large language models, like GPT-4 or Llama2, with external knowledge sources (such as knowledge bases) while efficiently managing conversation chains.

Reusing the project

You can reuse this project by cloning the project template in Quix Cloud. For more details, follow our guide on how to create projects from templates.

To use InfluxDB as a data sink, you’ll also need a free InfluxDB Cloud Serverless trial account which you can get by visiting their signup page.

We're also working on a version that you can run entirely on your local machine (using docker compose), so if you want to be notified when it's ready, be sure to join the #project-templates channel in the Quix Community Slack.

Prompting the bots

Each bot is given a specific role and prompted to play the role as best it can. We used LangChain to automatically load the prompts from a YAML file and send them in the right format.

Here’s what the prompt looks like for the AI bot playing the role of the customer

Note that we’ve included variables for the product (subject of the conversation) and mood (the tone of voice that the customer uses when speaking with the agent).

The mood, product and names of the bots are randomly selected from a list of possible values loaded from different text files.
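
As an illustration of the random selection, the sketch below picks one value per variable and fills a prompt fragment. The lists and the template wording are hypothetical stand-ins; in the project, the values come from text files and the template lives in the prompt file.

```python
import random

# Illustrative values; in the project these lists are loaded from text files.
moods = ["angry", "impatient", "confused"]
products = ["wireless headphones", "smart TV", "robot vacuum"]
names = ["Alex", "Sam", "Jordan"]

# Hypothetical prompt fragment with placeholders for the variables.
template = (
    "You are {name}, a customer contacting support about your {product}. "
    "Your tone of voice is {mood}."
)


def build_customer_prompt(rng: random.Random) -> str:
    """Pick one value per variable and fill in the template."""
    return template.format(
        name=rng.choice(names),
        product=rng.choice(products),
        mood=rng.choice(moods),
    )


prompt = build_customer_prompt(random.Random(42))  # seeded for repeatability
```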

The text files are as follows:

The prompt for the support agent has fewer variables, since the agent is always required to be polite and courteous.

Here’s the prompt YAML for the support agent.

The prompt file is loaded using LangChain’s ConversationChain class:
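
A minimal sketch of that loading step might look like the following. The prompt text, file name, token limit, and prefixes are all assumptions, and the imports use the older `langchain` package paths (newer releases have moved or deprecated these classes). LangChain is imported inside the function so the file-writing part can be tried on its own.

```python
import tempfile
from pathlib import Path

# Hypothetical prompt file in LangChain's YAML prompt format.
PROMPT_YAML = """\
_type: prompt
input_variables: ["product", "mood", "history", "input"]
template: |
  You are a customer with a faulty {product}. Your mood is {mood}.
  Conversation so far:
  {history}
  AGENT: {input}
  CUSTOMER:
"""


def write_prompt_file(directory: str) -> Path:
    """Write the example prompt to <directory>/prompt.yaml."""
    path = Path(directory) / "prompt.yaml"
    path.write_text(PROMPT_YAML)
    return path


def build_chain(llm, prompt_path, product: str, mood: str):
    """Load the prompt and wrap the model in a ConversationChain."""
    # Imported lazily so the rest of the sketch runs without LangChain installed.
    from langchain.chains import ConversationChain
    from langchain.memory import ConversationTokenBufferMemory
    from langchain.prompts import load_prompt

    # Fix the per-conversation variables; "history" and "input" stay open.
    prompt = load_prompt(str(prompt_path)).partial(product=product, mood=mood)
    memory = ConversationTokenBufferMemory(
        llm=llm,
        max_token_limit=300,  # assumed token budget
        human_prefix="AGENT",
        ai_prefix="CUSTOMER",
    )
    return ConversationChain(llm=llm, prompt=prompt, memory=memory)


with tempfile.TemporaryDirectory() as tmp:
    prompt_text = write_prompt_file(tmp).read_text()
```

Filling in `product` and `mood` up front leaves only `history` and `input` as open variables, which is what ConversationChain expects from its prompt.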

Note that the prompt format can be different for each language model. Large language models are trained on data in a specific format so the prompts need to match the format of the training data.
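
For Llama-2 chat models, the published format wraps the system persona in `<<SYS>>` tags and the whole turn in `[INST]` tags. The helper below is a hand-rolled sketch of a single-turn prompt; the function name and example strings are made up for illustration.

```python
def format_llama2_prompt(system: str, user: str) -> str:
    """Wrap a system persona and one user message in Llama-2 chat tags."""
    # The beginning-of-sequence token is normally added by the tokenizer,
    # so it is left out of the string here.
    return f"[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]"


prompt = format_llama2_prompt(
    system="You are a polite customer support agent.",
    user="My smart TV won't turn on.",
)
```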

LangChain Features

LangChain has many useful features that helped to simplify this project. Here’s a brief overview of all the LangChain features we used:

- load_prompt

This function is part of LangChain's overall Prompts module, which enables you to construct one cohesive prompt from different text fragments. It loads a prompt from a separate file, including variables that need to be populated at runtime (such as our randomly selected agent names). This enables you to manage the prompts separately from the main code. You can also use it to load other people’s prompts from LangChain Hub.

- ConversationChain

This is a key component of LangChain that helps you manage human-AI conversations, although we're hacking it a little to create AI-AI conversations. It also contains many options to help you manage the LLM’s memory of the entire conversation and it’s used to populate the “history” variable in the prompt.

- ConversationTokenBufferMemory

This module is part of ConversationChain and acts as a memory module that keeps a buffer of recent interactions in memory. Unlike other methods that rely on the number of interactions, this memory system determines when to clear or flush interactions based on the length of tokens used. Initially, our AI bots tended to run out of memory and crash when they had to process more than 512 tokens (roughly equivalent to words) at a time, so this module is invaluable for preventing “out of memory” issues.

- LlamaCpp

This uses a Python binding for llama.cpp (called llama-cpp-python), which supports inference for many quantized LLMs, including Llama-2. It takes advantage of the performance gains of using C++ together with 4-bit quantized models, and enables you to run Llama-2 on a CPU-only machine.

- Llama2Chat

This is an experimental wrapper class that supports the Llama-2 chat prompt format. Many open-source LLMs require you to enclose your chat prompts in specific “tags” or text fragments and this wrapper saves you from having to do that manually.
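
Of the features above, token-based memory is the easiest to picture in isolation. The class below is a simplified stand-in written for illustration, not LangChain's implementation: it counts whitespace-separated words instead of real tokens and drops the oldest messages once an assumed budget is exceeded.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


class TokenBuffer:
    """Keep recent messages, dropping the oldest once a token budget is exceeded."""

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.messages = deque()
        self.total_tokens = 0

    def add(self, message: str) -> None:
        self.messages.append(message)
        self.total_tokens += count_tokens(message)
        # Prune from the oldest end until the history fits the budget again.
        while self.total_tokens > self.max_tokens and len(self.messages) > 1:
            self.total_tokens -= count_tokens(self.messages.popleft())


buffer = TokenBuffer(max_tokens=8)
buffer.add("CUSTOMER: my headphones are broken")  # 5 words
buffer.add("AGENT: sorry to hear that")  # 5 more words, so the oldest is dropped
```

Pruning by token count rather than by number of turns keeps the prompt within the model's limit even when individual messages are long.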

Interacting with Kafka

LangChain is great for handling interactions with LLMs, but how do you propagate the output into other parts of your architecture? You could use webhooks or message queues, but we opted for Kafka because it enables us to replay the messages whenever we want and download the chat history as a CSV, which is very handy for analytics. Plus, Kafka is generally a great fit for event-driven applications.

To manage message flow and to process the data, we use our own open source Quix Streams library.

Quix Streams Features

The library has several handy data processing features which are especially convenient for those of you who are accustomed to working with Pandas:

StreamingDataFrame

This is a key feature that allows you to subscribe to a topic in Kafka as a dynamic “streaming” dataframe. This means you can perform vectorized operations on an entire column as you would on a static dataframe. Thus, to continuously count the number of tokens in the “review_text” column of a streaming dataframe, you would do this:
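
A minimal sketch of such a token counter, assuming Quix Streams 2.x, a local broker, and a JSON topic named `reviews` (the topic name, consumer group, and output column name are placeholders). The library is imported inside the function so that the counting helper can be tried without Kafka.

```python
def count_tokens(text: str) -> int:
    """Approximate the token count as the number of whitespace-separated words."""
    return len(text.split())


def build_token_counter(broker_address: str = "localhost:9092"):
    """Wire the helper into a StreamingDataFrame and return it with its app."""
    # Imported lazily so count_tokens can be tried without Quix Streams installed.
    from quixstreams import Application

    app = Application(broker_address=broker_address, consumer_group="token-counter")
    reviews = app.topic("reviews", value_deserializer="json")  # assumed topic name

    sdf = app.dataframe(reviews)
    # Column-style assignment: applied to every message as it arrives.
    sdf["tokens_in_review"] = sdf["review_text"].apply(count_tokens)
    return app, sdf
```

The pipeline only starts consuming once the application's `run` method is called.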

This is different from more conventional Kafka Python libraries where you would do something like this.
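
For comparison, here is roughly what the same logic looks like with an explicit polling loop. The sketch uses the `confluent-kafka` client as one example of a conventional library; the broker address, group id, and topic name are placeholders.

```python
import json


def count_tokens(text: str) -> int:
    """Approximate the token count as the number of whitespace-separated words."""
    return len(text.split())


def process(raw_value: bytes) -> dict:
    """Decode one message and add the token count by hand."""
    row = json.loads(raw_value)
    row["tokens_in_review"] = count_tokens(row["review_text"])
    return row


def consume_forever(broker_address: str = "localhost:9092"):
    """Poll the topic in a loop and process each message individually."""
    # Imported lazily so process() can be tried without a Kafka client installed.
    from confluent_kafka import Consumer

    consumer = Consumer({
        "bootstrap.servers": broker_address,
        "group.id": "token-counter",
        "auto.offset.reset": "earliest",
    })
    consumer.subscribe(["reviews"])  # assumed topic name
    try:
        while True:  # the explicit loop that a streaming dataframe hides
            msg = consumer.poll(1.0)
            if msg is None or msg.error():
                continue
            print(process(msg.value()))
    finally:
        consumer.close()


example = process(b'{"review_text": "works as expected"}')
```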

With Streaming DataFrames, you don't need any “while” or “for” loops to process messages which makes the code more concise.

For more information, see the StreamingDataFrame documentation.

Flexible Producer options

To continue on from the previous example, you can very quickly route processed data to a new topic with the to_topic function. This example shows how to route the data to a new topic with a renamed column:
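
A sketch of the rename-and-route step, assuming Quix Streams 2.x and an existing `app` and `sdf` like the ones from a token-counting pipeline. The output topic name and the new column name are placeholders.

```python
def rename_column(row: dict) -> dict:
    """Return a copy of the row with 'tokens_in_review' renamed to 'token_count'."""
    renamed = dict(row)
    renamed["token_count"] = renamed.pop("tokens_in_review")
    return renamed


def route_to_new_topic(app, sdf, output_topic_name: str = "reviews-with-counts"):
    """Attach the rename and a producer step to an existing StreamingDataFrame."""
    output_topic = app.topic(output_topic_name, value_serializer="json")
    sdf = sdf.apply(rename_column)
    sdf = sdf.to_topic(output_topic)
    return sdf


example = rename_column({"review_text": "works fine", "tokens_in_review": 2})
```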

If you need a more advanced producer with configurable parameters, there is also the Producer class.

Stateful processing

This feature enables you to easily keep track of stateful processes (such as cumulative addition) by storing the current state in the file system. For example, the following lines are taken from the code for the customer bot, and use state to keep track of the length of a conversation.

We set a message limit for each conversation so that the bots don’t blather on endlessly. This, in turn, ensures that we always get a variety of conversations about different products.
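
A stateful counter with a message limit might look like the sketch below. The limit value, the state key, and the output field names are assumptions, and `InMemoryState` is a small stand-in that mimics the `get`/`set` interface of the state object so the function can be tried locally.

```python
MESSAGE_LIMIT = 10  # assumed limit; the project's actual value may differ


def track_conversation_length(row: dict, state) -> dict:
    """Increment a per-key counter in state and flag when the limit is reached."""
    length = state.get("conversation_length", 0) + 1
    state.set("conversation_length", length)
    return {
        **row,
        "conversation_length": length,
        "limit_reached": length >= MESSAGE_LIMIT,
    }


class InMemoryState:
    """Minimal stand-in for the state object, for trying the function locally."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def set(self, key, value):
        self._data[key] = value


state = InMemoryState()
results = [track_conversation_length({"text": f"message {i}"}, state) for i in range(3)]
```

In Quix Streams 2.x, a function with this signature can be attached to a streaming dataframe with `sdf.apply(track_conversation_length, stateful=True)`.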

Stateful conversation storage

The history of the conversation is stored in memory within the ConversationChain object and is sent to the LLM each time, but if the service gets restarted, that memory is lost. To solve this problem, we pickle the ConversationChain object and store it in the Quix state folder so that the conversation can be resumed when a service is restarted.
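
The save-and-restore idea can be sketched with the standard library alone. Here a plain dict stands in for the ConversationChain object, a temporary directory stands in for the state folder, and the file naming scheme is an assumption.

```python
import pickle
import tempfile
from pathlib import Path


def save_conversation(conversation, state_dir: str, conversation_id: str) -> Path:
    """Pickle the conversation object to <state_dir>/<conversation_id>.pkl."""
    path = Path(state_dir) / f"{conversation_id}.pkl"
    with path.open("wb") as f:
        pickle.dump(conversation, f)
    return path


def load_conversation(state_dir: str, conversation_id: str, default=None):
    """Restore a pickled conversation, or return the default if none was saved."""
    path = Path(state_dir) / f"{conversation_id}.pkl"
    if not path.exists():
        return default
    with path.open("rb") as f:
        return pickle.load(f)


# A plain dict stands in for the ConversationChain object here.
with tempfile.TemporaryDirectory() as state_dir:
    save_conversation({"history": ["CUSTOMER: hello"]}, state_dir, "conv-1")
    restored = load_conversation(state_dir, "conv-1")
    missing = load_conversation(state_dir, "conv-2", default={})
```

Only unpickle files that your own service wrote, since loading a pickle can execute arbitrary code.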

Extending the project

There are many ways in which you can extend or customize this project.

Query the data in InfluxDB and download it as a CSV to use as a dataset

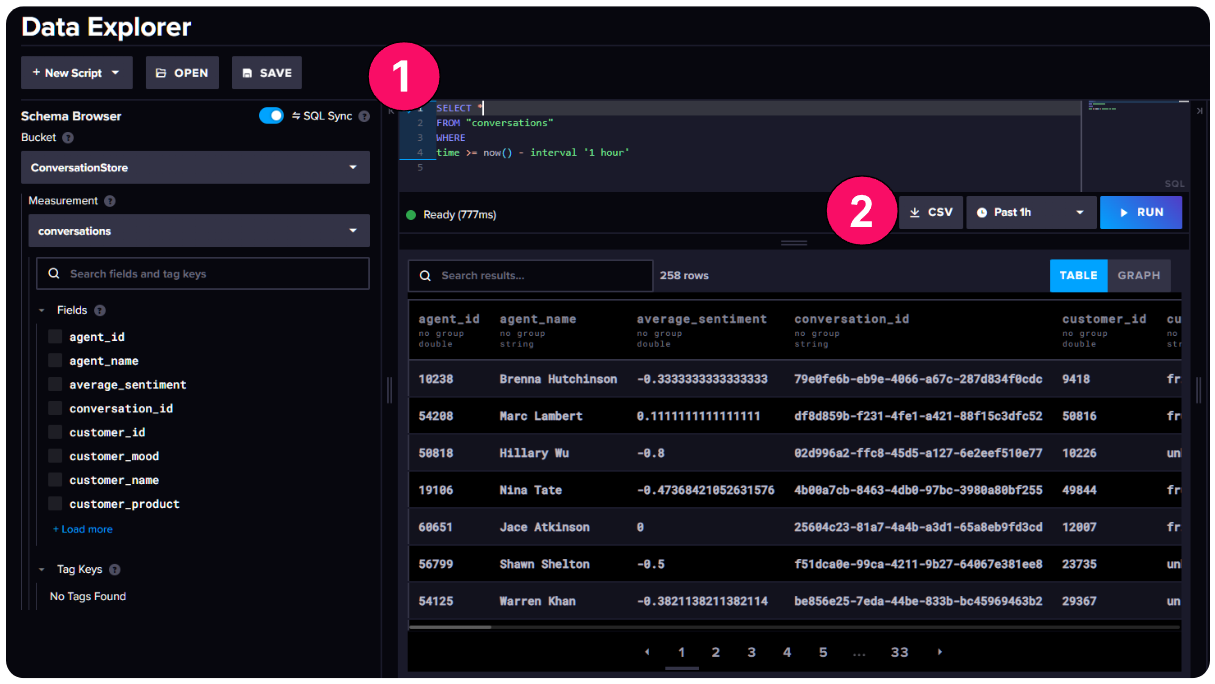

If you want to use the bots to create a dataset that you can process offline, you can output the conversation history to a static file. Assuming you have created a trial account in InfluxDB Cloud Serverless, you can open the data explorer, run SQL queries on the data, and download the results to a CSV file.

Experiment with different prompts

One fun idea is to change the prompts so that the bots are having some kind of debate.

For example, the YouTube channel Unconventional Coding showcases a similar example, where two bots argue about whether PHP is better than Python.

However, if you’re trying to test sentiment analysis for a very specific type of conversation, you could adapt the prompts to fit your use case. For example, you could generate conversations about issues with an online travel agent (instead of an electronics retailer).

Whatever you decide, updating the prompts is simply a matter of editing the relevant prompt.yaml files for the support agent and the customer.

Additionally, don't forget to update the system persona description in the Llama2Chat class.

Experiment with different models

You can also play with different models. For example:

- llama2_7b_chat_uncensored

While there are plenty of toxic speech datasets out there, an uncensored model can be useful for generating more specific types of toxic speech that you might want to use to test toxicity detection models.

Aside from that, some experts believe that uncensored LLMs follow instructions with slightly better accuracy and have higher quality output. This is because some degree of quality is often lost when fine-tuning a model to produce safer output (this phenomenon is known as the “alignment tax”). Thus, you could try an uncensored model simply to see if you get higher quality conversations.

- Mistral-7B-v0.1

This is one of the newer kids on the LLM block and has generated a lot of hype for outperforming Llama-2 on some tasks. It's produced by Mistral.ai, a French AI startup. There’s also a quantized version of the model that you can use with llama-cpp-python (so that it can run with just a CPU). However, it may take a little longer to generate responses.

Conclusion

Clearly, this project is not a production use case. Rather, the intention is to show you how LLMs and LangChain can work in tandem with Kafka to route messages through an application. Since Kafka-native tools can be tricky to work with (and Java-centric), our hope is also that the Quix Streams library makes interacting with Kafka more accessible to all you Python developers and LLM hackers out there.

We also hope that we’ve convinced you that Kafka can be very handy when building LLM-powered applications and is often superior to the request-and-response model or even to plain old message queues. The ability to replay data from a topic or dump it to a CSV file is very valuable for testing and comparing LLMs, not to mention retracing the history that led to “less than ideal” model responses.

OK, that's it for now, but keep an eye out for more LLM project templates like this one in the near future.

- For more questions about how to replicate this project, join our Quix Community Slack and start a conversation.

- To learn more about Quix Streams in general, check out the relevant section in the Quix documentation.

- To see more ML-oriented project demos, why not try out our Chat sentiment analysis demo, which uses the Hugging Face Transformers library to perform live sentiment analysis on chat messages (from real humans), or our Computer vision demo, which uses YOLOv8 to count vehicles.
