Claude Code wouldn’t behave, so I built a workflow engine to tame it

Discover how Klaus Kode turns Claude Code into a reliable workflow engine, blending agentic coding with simple API calls to build data pipelines

When it comes to coding, the Claude Code CLI is a tremendous time-saver. But it’s hard to control: even with the most carefully thought-out prompt and context engineering, it still forgets things and goes on wild goose chases.

Developers know this, and there is a cottage industry of orchestrators, tools and prompt frameworks for working with Claude Code. You can find many examples of these in the Awesome Claude Code GitHub repository.

Unfortunately, I couldn't find what I was looking for in the existing ecosystem of customizations, so I built my own (it's called Klaus Kode).

I want to explain what it is and why I built it so that anyone with a similar use case can benefit from it. I’m also curious if anyone out there has been trying to tackle similar challenges to the ones I encountered (with more than just prompt/context engineering).

Trying (and failing) to make Claude follow step-by-step workflows

For the past few months, I’ve been trying to get Claude Code to follow a very specific workflow. Obviously it involves generating code based on a requirements prompt but there are other steps too:

- I want it to update environment variable files and dependency definitions.

- I want it to upload the code to a cloud sandbox, install the dependencies, and run the code.

- Then I want it to download the run logs and tell me if the code is doing what it is supposed to do.

- Assuming the code looks good, I want to deploy it as a containerized app.

All these steps need to happen in a particular sequence, and they involve using APIs for interacting with the cloud sandbox.

There were also variations on the sequence depending on the type of app that I wanted to build. So, I needed Claude Code to be able to handle multiple workflow variants.
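To make the requirement concrete, the sequence above and one variant of it can be written down as plain data. This is only a sketch: the step names mirror the list above, and the `source_app` / `sink_app` variant keys and the extra `inspect_target_schema` step are hypothetical, invented for illustration.

```python
# Hypothetical step names that mirror the list above; not real project identifiers.
BASE_SEQUENCE = [
    "generate_code",
    "update_env_and_dependencies",
    "upload_to_sandbox",
    "install_dependencies",
    "run_code",
    "download_logs",
    "review_logs",
    "deploy_container",
]

# Hypothetical variants: each app type gets its own ordered list of steps.
WORKFLOW_VARIANTS = {
    "source_app": BASE_SEQUENCE,
    # Assumed example of a variant that needs one extra step up front.
    "sink_app": ["inspect_target_schema"] + BASE_SEQUENCE,
}


def steps_for(app_type: str) -> list[str]:
    """Return a copy of the ordered steps for one app type."""
    if app_type not in WORKFLOW_VARIANTS:
        raise ValueError(f"Unknown app type: {app_type!r}")
    return list(WORKFLOW_VARIANTS[app_type])


if __name__ == "__main__":
    for number, step in enumerate(steps_for("sink_app"), start=1):
        print(number, step)
```

Written this way, the order is unambiguous, and a new variant is just another list.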

- I tried hard to get Claude Code to follow an exact sequence and use the correct API endpoints at the right times. But I simply could not get it to follow the workflows consistently.

- I tried nested prompt files such as “master_workflows.md” which branched out to sub workflow prompts such as “workflow1.md” and “workflow2.md” depending on what kind of app I was trying to build.

- I also built an MCP server for the cloud sandbox platform API. I then included hints in my workflow prompts about which tool to use and when.

It all worked “OK” but was very brittle and Claude would occasionally get confused and follow the wrong step or call the wrong tool. It simply wasn't reliable.

It was also extremely slow.

I had to wait an eternity for Claude to think about the workflows and ponder what tools to use. The complex prompting and MCP tool descriptions also caused context bloat and meant that Claude was overwhelmed with information.

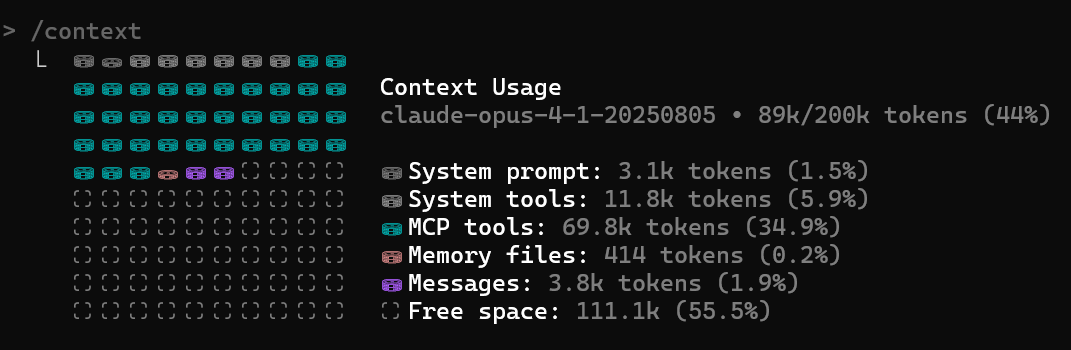

For example, check this screenshot of my available context after starting a conversation in Claude Code:

MCP tool descriptions are taking up 34% of my context before I have even started any work!

OK, I confess, over time, I’ve added a bunch of MCP servers to kick the tires and see if they’re useful. Nevertheless, even the one single MCP server that I built for Claude to use as part of my workflow still uses a lot of context.

Then it occurred to me: I really don’t need to tell Claude to call APIs for me. Why waste all that time and all those tokens?

I know what API endpoints to call and when. The workflows are fixed, not freeform tasks (where you would normally need Claude to figure out what MCP tools to use ad hoc).

I just needed Claude to stick to what it does best: Generate code and update files using the tools that it already has built in. I can just call the APIs with deterministic code.
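As a minimal sketch of what "deterministic code" can mean here, consider a plain function that builds the same HTTP request every time, with no model in the loop. The base URL, endpoint path, and payload shape are invented placeholders, not the sandbox platform's real API.

```python
import json
from urllib.request import Request

# Placeholder base URL; the real platform API is not shown in this post.
BASE_URL = "https://sandbox.example.invalid/api"


def build_upload_request(session_id: str, files: dict[str, str], token: str) -> Request:
    """Build (but do not send) the POST request that uploads code to a sandbox session."""
    body = json.dumps({"files": files}).encode("utf-8")
    return Request(
        url=f"{BASE_URL}/sessions/{session_id}/files",  # hypothetical endpoint
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_upload_request("demo", {"main.py": "print('hi')"}, token="secret")
    # Sending would be urllib.request.urlopen(request); omitted so the sketch runs offline.
    print(request.get_method(), request.full_url)
```

Nothing in this function needs tool descriptions or prompt tokens, and it behaves the same way on every run.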

Integrating Claude Code into a fixed workflow with the OpenAI and Claude SDKs

After playing around with a few different agentic frameworks, I decided to use OpenAI's Agents SDK because it was extremely easy to use. I had initially considered giving the coding work to a GPT-powered agent and letting it use tools instead. I also used other, simpler agents (no tools) for analysing log files.

However, after much testing and comparison, I realized that Claude Code was still the best at using tools and completing coding tasks. Thus, I attempted to integrate Claude Code into my workflow application (which was originally designed for OpenAI Agents).

This was surprisingly straightforward since the OpenAI Agents SDK is flexible enough to handle models from other providers. You don't necessarily need to use any OpenAI models at all.

However, to control Claude Code from within another program, I also had to add the Claude Code SDK. I used the SDK to ask Claude Code to create a Python app and when it is finished, hand control back over to the workflow engine so it can go to the next step. The next step was usually this: upload all the code, variables and dependencies to the cloud sandbox, run the app, and download the logs. This was all done using “deterministic code” that called the correct APIs in a predefined sequence.

So the entire workflow ended up being a mixture of agentic steps (“write this code and change these files according to the requirements”) and deterministic steps (call API “A” after the code generation step has finished).
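The mix of agentic and deterministic steps can be sketched as a small engine that owns the sequence. This is an illustration under assumptions, not Klaus Kode's actual code: the `Step` class, the step functions, and the state keys are all hypothetical, and the agentic step is a stand-in where a real engine would call a coding agent.

```python
from dataclasses import dataclass
from typing import Callable

State = dict  # shared data passed from one step to the next


@dataclass
class Step:
    name: str
    kind: str  # "agentic" or "deterministic"
    run: Callable[[State], State]


def generate_code(state: State) -> State:
    # Stand-in for the agentic step. A real engine would hand the requirements
    # to a coding agent here and wait for it to finish.
    return {**state, "files": {"main.py": "print('hello')"}}


def upload_and_run(state: State) -> State:
    # Stand-in for deterministic API calls (upload, install, run).
    return {**state, "run_id": "run-001"}


def download_logs(state: State) -> State:
    # Stand-in for a deterministic API call that fetches the run logs.
    return {**state, "logs": "hello\n"}


WORKFLOW = [
    Step("generate_code", "agentic", generate_code),
    Step("upload_and_run", "deterministic", upload_and_run),
    Step("download_logs", "deterministic", download_logs),
]


def run_workflow(steps: list[Step], state: State) -> State:
    """Run steps strictly in order; the engine, not the agent, decides what comes next."""
    history = []
    for step in steps:
        state = step.run(state)
        history.append((step.name, step.kind))
    return {**state, "history": history}


if __name__ == "__main__":
    final = run_workflow(WORKFLOW, {"requirements": "print hello"})
    for name, kind in final["history"]:
        print(f"{kind:>13}  {name}")
```

The point of the design is that the agent never chooses the next step. It finishes its task and returns, and the loop moves on.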

Going from gigantic prompts to bite-sized tasks

At the beginning of my journey I had expected that Claude Code could behave like a super-intelligent autonomous coding agent that could implement a Python application from conception all the way to deployment. In other words, I thought I could prompt it to “read these docs, then build this code, then use these MCP tools to test it, and if it works, use these other MCP tools to deploy it.”

The reality is that it needs a lot of human intervention and nudging at each step. And I don't really want it calling APIs for me (unless it's someone else's API that I have no idea how to use).

Instead, I broke the whole job down into smaller steps so that Claude only gets the context that it needs to implement the coding step (docs, code samples, etc.). Also, multiple “Claudes” get spawned at different steps, and each one looks at its task with fresh eyes. There is no long conversation history or thought process to maintain across the steps.
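One way to picture "only the context that it needs" is a prompt builder that starts from scratch for each step. The sketch below assumes a hypothetical mapping from step names to reference material; the step names, material keys, and placeholder texts are all invented for illustration.

```python
# Hypothetical reference material; in practice this would be docs and code samples on disk.
REFERENCE_MATERIAL = {
    "connector_docs": "Notes on reading from the data source (placeholder text).",
    "code_sample": "A short example application (placeholder text).",
    "deploy_guide": "Notes on deploying the container (placeholder text).",
}

# Each step lists only the material it needs (names are illustrative).
STEP_CONTEXT = {
    "write_connector": ["connector_docs", "code_sample"],
    "write_deploy_config": ["deploy_guide"],
}


def build_prompt(step_name: str, task: str) -> str:
    """Assemble a fresh, self-contained prompt for one step, with no earlier conversation."""
    sections = [f"Task: {task}"]
    for key in STEP_CONTEXT[step_name]:
        sections.append(f"## {key}\n{REFERENCE_MATERIAL[key]}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_prompt("write_connector", "Write the connector"))
```

Each prompt is built from nothing, so a step never inherits material or conversation history from the one before it.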

Klaus Kode is born

Since I ended up with a tool that was kind of a “parent” to Claude Code, I called it Klaus Kode (I also live in Germany where “C-words” such as “Class”, “Culture” and “Camera” all acquire a “K” in German…so why not “Klaus”).

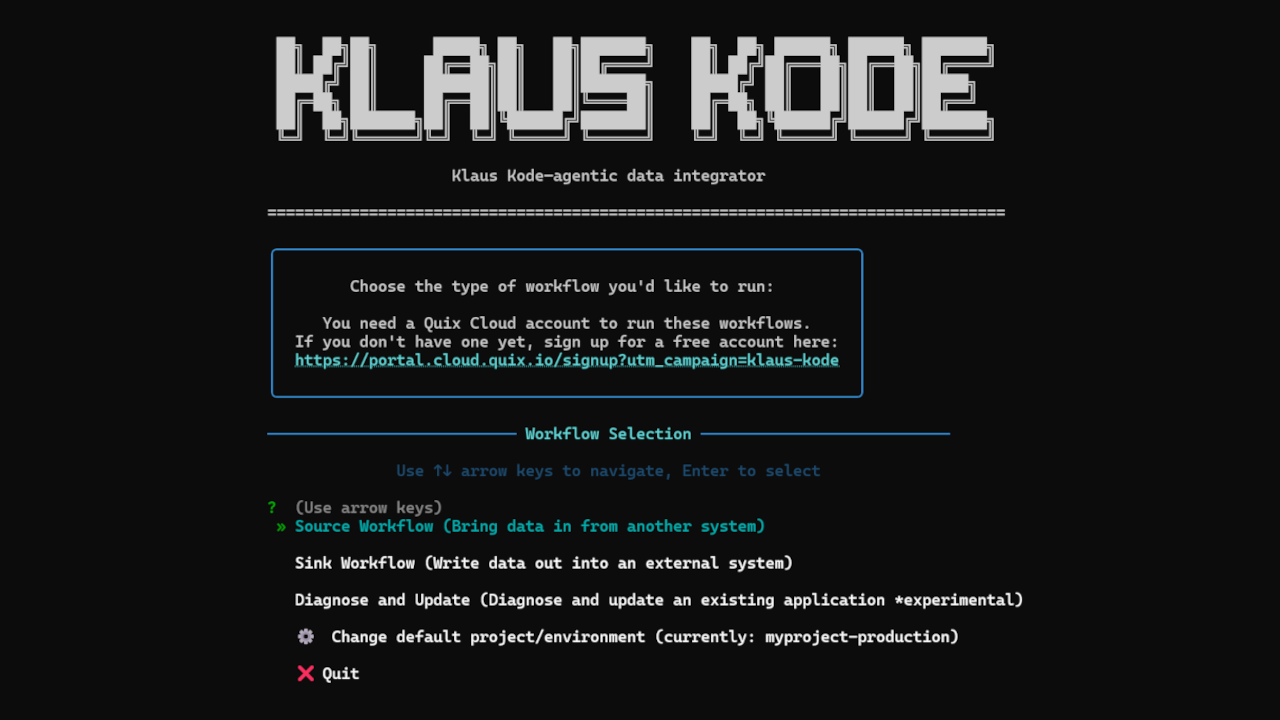

It's a CLI tool that guides you step-by-step (wizard style!) through specific workflows that center around coding and deploying Python applications.

Now is a good time to disclose that Klaus Kode is built for a very specific use case and designed to interact with a particular platform (where it runs the code).

As I state in the project’s readme, Klaus Kode is for engineers and other technical roles who need to build data pipelines but don't have time to build integrations from the ground up. But this isn’t the kind of data pipeline that cron jobs or webhooks can typically handle.

Klaus Kode is designed for working with high-fidelity data sources. These could be continuous telemetry streams, blockchain transaction feeds, or large static datasets that need to be ingested and processed in a distributed manner. Of course you could still use it to download a CSV file at 9am each day and process the data but that’s kind of like using a sledgehammer to crack a walnut. Luckily, the platform is so simple that you won't feel like you’re using a sledgehammer at all.

Creating Python applications in Quix Cloud

Klaus Kode’s main job is to help users create Python applications that run in the Quix Cloud platform. The Quix platform allows engineers to build and run high-throughput data pipelines and consolidate data from multiple sources.

This makes it ideal for industrial applications where you have scattered measurements and configurations from wind tunnels, climate chambers, and simulators. Traditionally, this data was only available through lengthy batch exports, usually ending up as CSVs buried in emails and labyrinthine network drives. With proper data engineering, you can use that data more effectively. Yet, in these kinds of companies, there’s often not enough dedicated data engineering resource to go around. So data plumbing tends to be a bit of a side project for engineers whose main job is to build and design physical systems (engines, pumps, cooling systems, and so on).

Thus, Quix, and by extension Klaus Kode, are designed to help those folks build production-ready data pipelines faster without having to read reams of technical documentation.

Klaus Kode is also designed around the tech stack that Quix uses. For example, Kafka topics are used as a means of exchanging data (rather than simple message queues) because Quix needs to handle high-volume sensor data. Quix also uses Kubernetes under the hood, so Python applications are deployed in Docker containers and can be scaled horizontally with replicas. Kafka and Kubernetes are quite complicated technologies, so all that complexity is hidden under a layer of abstraction and only the most important functions are exposed via APIs.

So earlier, when I said “Klaus runs the code in a sandbox” what I mean is that it calls a bunch of Quix APIs so that the code runs in a development container running in a Kubernetes cluster. You just don't have to worry about any of that low-level junk because Klaus and Quix take care of it for you.

You don't need MCPs or a complex prompt system to orchestrate a workflow

That's pretty much the main learning from this whole experience. I am not an expert on agents (hell, I’m not really a developer either). Klaus Kode is indeed agentic, yet in a limited sense (the steps powered by Claude Code are where the real agentic work happens). But what I have discovered is that you should only give Claude Code (or any other “super agent”) the context and tools it needs to perform a discrete task in a workflow.

I think it’s unwise to overwhelm any AI tool with directories full of prompt files and tool descriptions as soon as it starts up. And, in my opinion, you should only use agents when you really need them, not to make simple API calls. If the API returns something unexpected that causes an error, sure, maybe you can hand it off to an agent to figure out how to call the API properly, but for me, regular deterministic Python functions usually do the trick.
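The "deterministic first, agent only on failure" idea can be sketched as a small wrapper. Everything here is hypothetical: `ApiError`, `deploy`, and `ask_agent` are stand-ins invented for illustration, and a real fallback would call an actual agent rather than return a string.

```python
class ApiError(Exception):
    """Raised by the (hypothetical) API wrapper when a call fails."""


def call_with_fallback(api_call, agent_fallback, payload):
    """Try the plain function first; involve an agent only if it raises ApiError."""
    try:
        return {"handled_by": "deterministic", "result": api_call(payload)}
    except ApiError as error:
        return {"handled_by": "agent", "result": agent_fallback(payload, str(error))}


def deploy(payload):
    # Stand-in for a regular API call; fails on an input it does not expect.
    if "image" not in payload:
        raise ApiError("missing field: image")
    return f"deployed {payload['image']}"


def ask_agent(payload, error_message):
    # Stand-in for handing the failure to an agent to investigate.
    return f"agent asked to investigate: {error_message}"


if __name__ == "__main__":
    print(call_with_fallback(deploy, ask_agent, {"image": "app:1"}))
    print(call_with_fallback(deploy, ask_agent, {}))
```

In the common case the agent is never invoked, so the happy path costs no tokens and no waiting.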

Anyway, I hope laying out my approach gives you some inspiration for your own projects. I’m very curious to see who else has successfully used the Claude Code SDK to integrate Claude Code into enterprise systems. If you’ve been trying to bend it toward real production use cases, I’d love to hear about your experiences.

Check out the repo

Our Python client library is open source, and brings DataFrames and the Python ecosystem to stream processing.

Interested in Quix Cloud?

Take a look around and explore the features of our platform.
