Automate the manual work that's limiting your test throughput

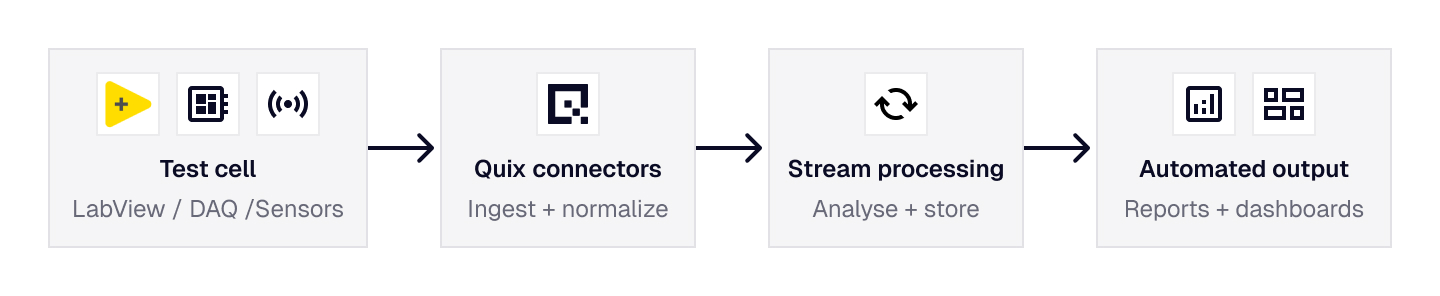

Quix automates the data pipeline around your tests, from ingestion and analysis through storage and reporting, so your time goes to engineering decisions, not file handling.

Manual overhead is hard to fix with your current tools

You've probably tried to automate parts of your test workflow before. It's difficult because your current tools weren't designed for it.

Your systems weren't designed to talk to each other

Post-processing is bolted on after the test

No feedback loop during execution

Reporting is still a manual project

'How to Load & Analyze Engineering Data Faster with Modern Software Principles'

What changes when the data overhead per test shrinks

Stop tests when you have the answer

Reports waiting for you in the morning

Monitor multiple rigs from one station

Dynamic parameters, fewer calibration runs

What automated test workflows look like with Quix

Connect your systems once, data flows automatically

Quix provides pre-built connectors for MQTT, OPC-UA, InfluxDB, and LabVIEW, plus a Python framework for custom integrations. You wire up your data sources once. After that, the data from every test run is automatically ingested, normalised, tagged with metadata, and stored. The export step and manual file handling disappear entirely.

Every test run linked to its full configuration

Quix captures the complete configuration state at the start of each run: software versions, rig hardware settings, environmental setpoints, instrument calibrations. That snapshot is stored as structured metadata attached to the time-series data. When you need to reproduce a result or understand why two runs differ, the configuration context is already there. No reconstructing what was running from memory or separate systems.
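To make the idea concrete, here is a minimal sketch of a configuration snapshot stored as structured metadata and then compared across two runs. It uses plain Python only and is not the Quix API; `RunConfig`, `diff_configs`, and every field name and value are hypothetical, chosen to mirror the four categories listed above.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class RunConfig:
    """Hypothetical snapshot captured once at the start of a test run."""
    software_versions: dict
    rig_settings: dict
    environmental_setpoints: dict
    instrument_calibrations: dict


def _flatten(nested, prefix=""):
    """Turn nested dicts into a flat {"section.key": value} mapping."""
    flat = {}
    for key, value in nested.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(_flatten(value, name + "."))
        else:
            flat[name] = value
    return flat


def diff_configs(a, b):
    """Return {setting: (value_in_a, value_in_b)} for every setting that differs."""
    flat_a, flat_b = _flatten(asdict(a)), _flatten(asdict(b))
    return {
        key: (flat_a.get(key), flat_b.get(key))
        for key in sorted(flat_a.keys() | flat_b.keys())
        if flat_a.get(key) != flat_b.get(key)
    }


# Illustrative values only.
run_a = RunConfig(
    software_versions={"controller": "2.4.1"},
    rig_settings={"load_cell_range_kn": 50},
    environmental_setpoints={"chamber_temp_c": 23.0},
    instrument_calibrations={"load_cell": "2024-01-10"},
)
run_b = RunConfig(
    software_versions={"controller": "2.5.0"},
    rig_settings={"load_cell_range_kn": 50},
    environmental_setpoints={"chamber_temp_c": 40.0},
    instrument_calibrations={"load_cell": "2024-01-10"},
)

print(diff_configs(run_a, run_b))
```

Because the snapshot travels with the run as structured data, the question "why do these two runs differ?" becomes a dictionary comparison rather than a search through notes and separate systems.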

Processing runs in parallel with the test

Instead of waiting until after the test to process data, Quix runs your analysis logic against the live sensor feed. Data cleaning, unit conversion, derived channel calculation, and threshold monitoring all happen as data arrives. When the test ends, the processed data is already in the format you need.
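As a rough illustration of processing data as it arrives, the sketch below handles one sample at a time and applies the four steps named above: cleaning, unit conversion, a derived channel, and a threshold check. It is plain Python, not the Quix framework; the function `process_live`, the channel names, and the 90 °C limit are all assumptions made for the example.

```python
def process_live(samples, temp_limit_c=90.0):
    """Yield one processed record per valid raw sample, as each sample arrives."""
    required = ("temp_f", "voltage_v", "current_a")
    for raw in samples:
        # Data cleaning: skip samples with a missing reading.
        if any(raw.get(channel) is None for channel in required):
            continue
        # Unit conversion: Fahrenheit to Celsius.
        temp_c = (raw["temp_f"] - 32.0) * 5.0 / 9.0
        yield {
            "t": raw["t"],
            "temp_c": temp_c,
            # Derived channel: electrical power from voltage and current.
            "power_w": raw["voltage_v"] * raw["current_a"],
            # Threshold monitoring: flag samples above the limit.
            "over_temp": temp_c > temp_limit_c,
        }


# Stand-in for a live sensor feed; any iterable of samples works.
feed = [
    {"t": 0.0, "temp_f": 68.0, "voltage_v": 12.0, "current_a": 2.0},
    {"t": 0.1, "temp_f": None, "voltage_v": 12.0, "current_a": 2.0},
    {"t": 0.2, "temp_f": 212.0, "voltage_v": 12.0, "current_a": 2.5},
]

for record in process_live(feed):
    print(record)
```

A generator is used here so that each record is available the moment its sample has been read, which is the property that lets the processed data be ready when the test ends.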

Event-driven automation after each test

Quix detects when a test completes and can trigger downstream processes automatically: generate a standardised report, calculate parameters for the next run, update a dashboard, or send an alert. The sequence from "test complete" to "report ready" happens without human intervention.
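The pattern behind this can be sketched in a few lines: handlers subscribe to a "test complete" event, and all of them run when that event is emitted. This is a generic illustration in plain Python, not how Quix implements it; `EventBus`, the event name, both handlers, and the fixed 5 kN step are hypothetical.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process dispatcher: handlers subscribe to named events."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, event_type):
        """Decorator that registers a handler for one event type."""
        def register(handler):
            self._handlers[event_type].append(handler)
            return handler
        return register

    def emit(self, event_type, payload):
        """Call every handler registered for the event and collect the results."""
        return [handler(payload) for handler in self._handlers[event_type]]


bus = EventBus()


@bus.on("test_complete")
def build_report(run):
    peak = max(run["load_kn"])
    return f"Run {run['run_id']}: peak load {peak} kN over {len(run['load_kn'])} samples"


@bus.on("test_complete")
def next_run_parameters(run):
    # Hypothetical rule: set the next target 5 kN above this run's peak.
    return {"next_target_kn": max(run["load_kn"]) + 5.0}


# Illustrative payload; in practice this would come from the finished run.
results = bus.emit("test_complete", {"run_id": "R-001", "load_kn": [10.0, 42.5, 39.0]})
for result in results:
    print(result)
```

Adding another downstream step, such as a dashboard update or an alert, means registering one more handler; the code that signals the end of the test does not change.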

Sits alongside your existing test cell infrastructure instead of replacing it

Quix connects to your DAQ hardware and test executives through standard industrial protocols. LabVIEW, TestStand, and your proprietary test control systems stay in place. Quix doesn't touch your control logic or certified sequences. It automates the data handling that happens around them. Your test cell setup stays the same; the manual pipeline around it disappears.
