Understand test data anomalies in seconds

Quix links your time-series data to configuration records, maintenance history, and duty cycles in a single queryable system. When you spot an anomaly, the full context is already attached to the data.

The tools you have weren't built to work together

You start every troubleshooting session with the same question: is this real, or is something wrong with my equipment? Answering that should take seconds. Instead it takes an hour of cross-referencing because your information lives in five different systems that don't talk to each other.

Your maintenance record is chat messages

Duty cycle tracking is a manual spreadsheet

Your asset management tools don't integrate with your test data

You can't rule out simple causes quickly


What changes when context is built into the data

Rule out equipment issues in seconds

Duty cycles tracked automatically

Scales as your facility grows

Investigations preserved for the next person

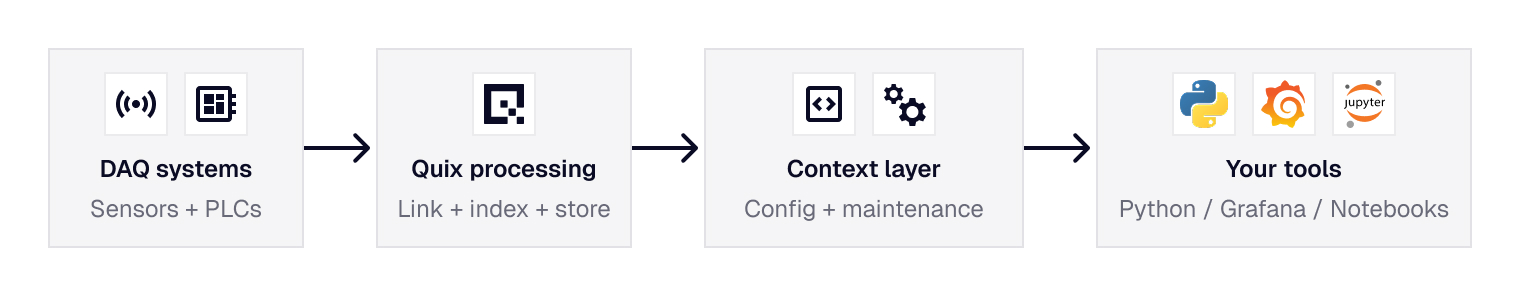

How it works

Quix captures the full configuration state at the start of every test run: installed serial numbers, software version, rig settings, and environmental setpoints. Service events, component replacements, and duty cycle counters are ingested alongside sensor data. Everything is stored as structured metadata attached to the time-series. That means you can query across your full test history ("show me every run where this parameter exceeded a threshold on rig 3 in the last six months") and get results in seconds.
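The example query above can be sketched in plain Python. The sketch assumes each run is stored as one record that holds its configuration snapshot next to its sensor samples. This is not the Quix API. `TestRun`, `runs_exceeding`, and the field and parameter names (`rig`, `config`, `samples`, `oil_temp_c`, `pump_serial`) are hypothetical and chosen only to illustrate the idea. Because the context is attached when the data is captured, the question becomes a single filter, with no cross-referencing step. "Six months" is approximated here as 182 days.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class TestRun:
    """Hypothetical record: one test run with its context attached."""
    run_id: str
    rig: str
    started_at: datetime
    # Configuration snapshot taken at run start (serial numbers, software version, ...)
    config: dict
    # Parameter name -> sampled values for this run
    samples: dict[str, list[float]] = field(default_factory=dict)


def runs_exceeding(runs, parameter, threshold, rig, since):
    """Return runs on `rig`, started at or after `since`, where any sample
    of `parameter` went above `threshold`."""
    return [
        r for r in runs
        if r.rig == rig
        and r.started_at >= since
        and any(v > threshold for v in r.samples.get(parameter, []))
    ]


# Illustrative data only (not real test results)
now = datetime(2024, 6, 1)
runs = [
    TestRun("run-001", "rig 3", now - timedelta(days=30),
            {"software_version": "2.1.0", "pump_serial": "SN-1042"},
            {"oil_temp_c": [88.0, 97.5, 91.2]}),
    TestRun("run-002", "rig 3", now - timedelta(days=400),   # too old
            {"software_version": "1.9.4", "pump_serial": "SN-0977"},
            {"oil_temp_c": [99.0]}),
    TestRun("run-003", "rig 1", now - timedelta(days=10),    # wrong rig
            {"software_version": "2.1.0", "pump_serial": "SN-1120"},
            {"oil_temp_c": [99.0]}),
    TestRun("run-004", "rig 3", now - timedelta(days=5),     # under threshold
            {"software_version": "2.2.0", "pump_serial": "SN-1042"},
            {"oil_temp_c": [80.0, 85.0]}),
]

six_months_ago = now - timedelta(days=182)
hits = runs_exceeding(runs, "oil_temp_c", 95.0, "rig 3", six_months_ago)

for run in hits:
    # The configuration is already on the record, so no second lookup is needed
    print(run.run_id, run.config["software_version"], run.config["pump_serial"])
    # → run-001 2.1.0 SN-1042
```

The flagged run carries its software version and pump serial number with it. Ruling out a recent component swap or software change is therefore a matter of reading the record, not searching chat logs or spreadsheets.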

Your existing analysis tools stay in place. Quix makes them more effective by ensuring the data arrives pre-indexed with context attached. If you work in Python, Grafana, Marimo Notebooks or custom dashboards, your workflow stays the same.
