Why your test data ingestion is broken (and how to fix it)

Test rigs use diverse data interfaces such as CSV files in local file systems or real-time data via MQTT or tools like LabVIEW.

How long did your team spend last week trying to find test data from that battery thermal run three months ago?

If you're like most R&D teams, someone eventually found it buried in a spreadsheet with a cryptic filename, assuming it wasn't lost when someone left the company. This isn't just frustrating, it's expensive. Critical test data gets dumped on local hard drives, shuffled around on flash drives, and stored wherever seemed convenient at the time.

Thomas Neubauer, CTO and co-founder of Quix, has spent his career dealing with sensor data from F1 cars, emergency fleet vehicles, and high-speed railways. His recent masterclass on test data ingestion reveals why most R&D teams are stuck with fragile, desktop-based workflows that create more problems than they solve.

Most test facilities are stuck in the flash drive era

Walk into any test facility and you'll see the same setup. Someone's got a laptop connected to the test rig - maybe it's a wind tunnel for drone prototypes or a thermal chamber for battery testing. They run some proprietary desktop application, dump the data locally, then transfer it via flash drive to wherever seems logical that day.

"In the worst case, you're dumping data on the laptop hard drive and people are using flash drives to move data around," Thomas explains. Even when teams upgrade to shared network drives, the fundamental problems remain.

The slightly better approach involves collectors like Telegraf connecting directly to test rigs and syncing to databases. But this creates its own issues. When data lands in the database, batch jobs pull it back out for post-processing, normalization, and down-sampling because the raw machine data isn't practical for domain experts to use.

This approach has a serious problem: if something goes wrong during ingestion, your data is gone forever.

Why F1 teams get this right

Thomas's work with Formula 1 telemetry systems offers a blueprint for handling complex test data. F1 teams collect sensor data from three sources: cars on track, wind tunnels, and simulators. Some sensors produce over 1,000 data points per second, seriously high volume.

The important insight? Get your data to a centralized platform as quickly as possible. "We're trying to minimize the number of ways you can make mistakes," Thomas notes. "If you make a mistake in the ingestion pipeline on premise, the data is usually lost. But when you make mistakes later on, you can reprocess the data and recover."

This means running a local gateway (like an MQTT broker) on premise, then immediately streaming data to your cloud platform. The raw data gets stored in a blob store for reprocessing, while processed data goes to your time-series database.

The configuration nightmare

Test data without context is useless. Imagine you're testing different battery chemistries in thermal chambers. Each test run has complex configuration metadata: which battery manufacturer, what temperature profile, which charging protocol.

Most teams handle this wrong. They either send configuration data with every sensor message (wasting massive bandwidth) or send it once at the beginning. The second approach creates a bigger problem - any service that joins the stream later has to be stateful, which is complicated and expensive.

The better approach separates time-series data from configuration data entirely. Use different channels and different databases. Time-series databases excel at handling sensor data but struggle with complex configuration metadata. When you need both, join only the specific configuration fields you actually need.

This separation enables experiment management layers that let you overlay different test results against configuration changes. Sometimes you discover that higher probe temperatures correlate with a different ink manufacturer in your toner cartridge - insights you'd miss without proper data organization.

The MQTT data transformation challenge

MQTT delivers data in a specific format that works well for the protocol but poorly for analysis. A typical topic structure looks like machine_id/sensor_name with the sensor value in the payload. For a 3D printer, you might see:

3d_printer_1/bed_temperature: 65.2

3d_printer_1/nozzle_temperature: 210.4

3d_printer_1/print_speed: 50This row-based structure needs transformation to columns for practical analysis. The first step involves parsing the topic to extract machine name and sensor ID, then converting rows to columns. You end up with something more useful:

timestamp | machine | bed_temperature | nozzle_temperature | print_speed

But this is just the beginning. The data still has gaps and timing issues that require stateful operations like down-sampling and interpolation.

Building resilient ingestion pipelines

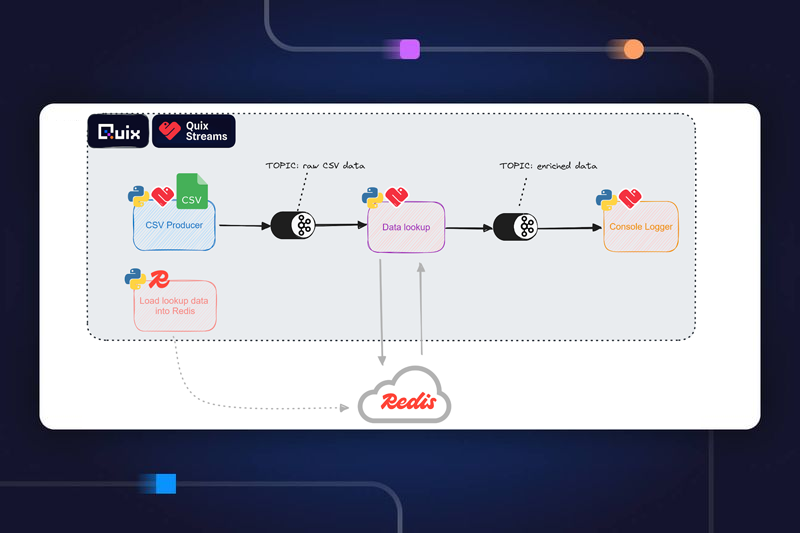

The demonstration in Thomas's webinar shows how to build fault-tolerant ingestion using the Quix Framework. The architecture uses Kafka topics as a safety buffer between data sources and processing services.

When MQTT data arrives, it immediately goes to a "raw" Kafka topic with retention settings (like 50MB of historical data). This creates a safety zone where you can replay messages if downstream processing fails. If a transformation service crashes while processing critical test data, you can restart it and reprocess from where it left off.

The same principle applies to HTTP endpoints for older machines that can't handle MQTT. A simple Flask service receives HTTP posts and immediately produces them to Kafka topics. No processing logic in the API layer - if something breaks, the data remains safe.

Multiple data sources, unified schema

Real test environments mix protocols. Your newer drone test rigs might support MQTT, while legacy HVAC test chambers only offer HTTP APIs or file dumps. The solution is abstracting your data organization from specific source technologies.

Create normalization services that convert different input formats to a common schema. When you upgrade your data acquisition hardware, you won't need to rebuild everything from scratch. Your downstream analytics and storage systems remain unchanged.

This flexibility matters when you're managing test data from rockets, autonomous vehicles, or industrial robots where equipment lifecycles span decades.

Making it practical

The technical implementation matters less than the architectural principles. Get data off local machines quickly. Store raw data for reprocessing. Separate time-series from configuration data. Use message queues as safety buffers.

Teams that implement these patterns report significant improvements: faster access to historical test data, better cross-team collaboration, and the ability to rerun analysis when requirements change.

Your test data represents months of expensive prototype development and validation work. Don't let it disappear into the flash drive graveyard.

Ready to modernize your R&D data infrastructure? Explore how centralized streaming platforms can transform your test data workflows from fragile desktop processes into reliable, collaborative systems that scale with your engineering teams.

Check out the repo

Our Python client library is open source, and brings DataFrames and the Python ecosystem to stream processing.

Interested in Quix Cloud?

Take a look around and explore the features of our platform.

Interested in Quix Cloud?

Take a look around and explore the features of our platform.

.svg)